Big data storage and processing have advanced considerably in recent years, and several technologies have emerged to meet organizations' varied needs. Among them, Apache Iceberg and Delta Lake have become two of the most widely adopted open table formats for building lakehouse architectures, which add warehouse-style structure (transactions, schemas, and versioning) to files stored in a data lake. This article compares Iceberg and Delta Lake in detail, covering their features, functionality, and use cases, to help you make an informed decision for your data management needs.

Introduction to Apache Iceberg and Delta Lake

Apache Iceberg is an open table format for large analytic tables that many engines, including Spark, Flink, Trino, and Hive, can read and write. It was originally developed at Netflix, entered the Apache Incubator in 2018, and graduated to a top-level Apache project in 2020. Delta Lake is an open-source storage layer created by Databricks and now hosted by the Linux Foundation; it adds ACID transactions and versioning to Parquet files stored in cloud object stores or HDFS. Both technologies aim to address the limitations of traditional data warehousing by offering a more flexible, scalable, and cost-effective alternative.

Key Points

- Apache Iceberg and Delta Lake are open table formats used to build lakehouse architectures for efficient data management.

- Iceberg is an engine-neutral open table format for scalable data management, while Delta Lake is a storage layer built around a transaction log for reliable data storage.

- Both technologies aim to overcome traditional data warehousing limitations with flexibility, scalability, and cost-effectiveness.

- Iceberg emphasizes metadata management, schema and partition evolution, and snapshot-based versioning, whereas Delta Lake emphasizes data reliability and performance, especially within the Spark and Databricks ecosystem.

- The choice between Iceberg and Delta Lake depends on specific use cases, data management needs, and compatibility with existing infrastructure.

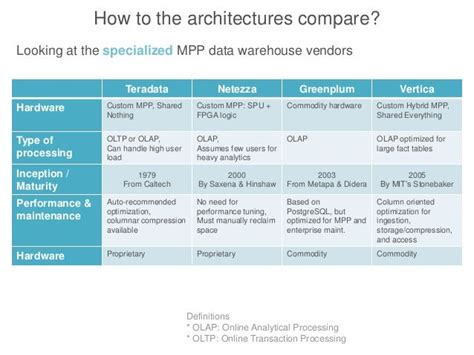

Architecture and Design Principles

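Apache Iceberg's architecture is centered on its table metadata. Each table has a metadata file that records the schema, the partition spec, and a list of snapshots; each snapshot points to a manifest list, which points to manifest files that list the data files along with column statistics. A catalog stores the pointer to the current metadata file, so any engine that understands the format can plan queries from metadata alone, without listing directories. Iceberg's design principles focus on simplicity, flexibility, and scalability, which makes it attractive for organizations working with large data sets. Delta Lake's architecture, by contrast, is built around a transaction log: a `_delta_log` directory alongside the Parquet data files holds ordered JSON commit files (plus periodic Parquet checkpoints), and the current state of a table is obtained by replaying those commits. Delta Lake's design principles emphasize data reliability, performance, and compatibility with existing Spark data pipelines.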
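To make the two metadata models concrete, here is a deliberately simplified, in-memory Python sketch. It is not either project's API: the names `delta_live_files`, `Snapshot`, and `TableMetadata` are hypothetical, real Delta commits are JSON files with many more action types, and the Iceberg model collapses the manifest-list layer into the snapshot itself. It shows the central contrast: Delta derives the current file set by replaying a log, while Iceberg reads it from whichever snapshot the metadata points at. Both models also make time travel straightforward.

```python
from __future__ import annotations

from dataclasses import dataclass, field


def delta_live_files(log: list[list[tuple[str, str]]], version: int | None = None) -> set[str]:
    """Delta-style: replay commits 0..version (default: latest) and return live data files.

    Each commit is a list of (action, path) pairs; only "add" and "remove" are modeled.
    """
    last = len(log) - 1 if version is None else version
    live: set[str] = set()
    for commit in log[: last + 1]:
        for action, path in commit:
            if action == "add":
                live.add(path)
            elif action == "remove":
                live.discard(path)
    return live


@dataclass(frozen=True)
class Snapshot:
    """Iceberg-style immutable snapshot (manifest list omitted for brevity)."""
    snapshot_id: int
    parent_id: int | None
    manifests: tuple[tuple[str, ...], ...]  # each inner tuple = one manifest's data files


@dataclass
class TableMetadata:
    snapshots: dict[int, Snapshot] = field(default_factory=dict)
    current_snapshot_id: int | None = None

    def commit(self, snapshot: Snapshot) -> None:
        """Record a new snapshot and make it the current one."""
        self.snapshots[snapshot.snapshot_id] = snapshot
        self.current_snapshot_id = snapshot.snapshot_id

    def files(self, snapshot_id: int | None = None) -> set[str]:
        """Return the data files of a snapshot (default: the current snapshot)."""
        sid = self.current_snapshot_id if snapshot_id is None else snapshot_id
        if sid is None:
            return set()
        return {path for manifest in self.snapshots[sid].manifests for path in manifest}


log = [
    [("add", "part-0.parquet"), ("add", "part-1.parquet")],     # version 0
    [("remove", "part-0.parquet"), ("add", "part-2.parquet")],  # version 1: part-0 rewritten as part-2
]
print(sorted(delta_live_files(log)))             # ['part-1.parquet', 'part-2.parquet']
print(sorted(delta_live_files(log, version=0)))  # ['part-0.parquet', 'part-1.parquet']

meta = TableMetadata()
meta.commit(Snapshot(1, None, (("a.parquet", "b.parquet"),)))
meta.commit(Snapshot(2, 1, (("b.parquet",), ("c.parquet",))))
print(sorted(meta.files()))   # ['b.parquet', 'c.parquet']
print(sorted(meta.files(1)))  # ['a.parquet', 'b.parquet']
```

The replay cost is why Delta periodically writes checkpoints, and the snapshot tree is why Iceberg can reuse unchanged manifests across snapshots; neither optimization is modeled here.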

| Feature | Apache Iceberg | Delta Lake |
|---|---|---|
| Architecture | Open table format | Transactional storage layer |
| Design principles | Simplicity, flexibility, scalability | Data reliability, performance, compatibility |
| Data management | Metadata-driven (snapshots, manifest lists, manifests) | Transaction log (`_delta_log`) with ACID commits |

Comparison of Features and Functionalities

A side-by-side comparison of Apache Iceberg and Delta Lake reveals both similarities and differences in their features and functionality. Both support ACID transactions, so readers always see a consistent table state even while writers are committing. Iceberg's distinguishing emphasis is metadata management and engine neutrality: its format is specified independently of any single engine, so Spark, Flink, Trino, and others can read and write the same tables. Delta Lake is most tightly integrated with Apache Spark and Databricks and is designed to slot into existing Spark data pipelines.
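Both formats implement these transactions with optimistic concurrency control. A Delta writer commits by creating the next numbered log file, which must succeed only if no other writer created it first; an Iceberg writer commits by atomically swapping the catalog's pointer to a new metadata file. The sketch below models the shared idea in plain Python, with an in-memory log and a lock standing in for the storage system's atomic operation. `VersionedLog` and `commit_with_retry` are hypothetical names, and a real engine also checks whether the winning concurrent commits actually conflict before it retries.

```python
from __future__ import annotations

import threading


class VersionedLog:
    """In-memory stand-in for a table log in which each version can be written once."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # simulates the store's atomic put-if-absent / swap
        self.commits: list[list[str]] = []

    def latest_version(self) -> int:
        with self._lock:
            return len(self.commits) - 1

    def try_commit(self, version: int, actions: list[str]) -> bool:
        """Write `version` only if it is exactly the next version; otherwise fail."""
        with self._lock:
            if version != len(self.commits):
                return False  # another writer claimed this version first
            self.commits.append(list(actions))
            return True


def commit_with_retry(log: VersionedLog, actions: list[str], max_attempts: int = 10) -> int:
    """Optimistic commit: read the latest version, try to write the next one, retry if beaten."""
    for _ in range(max_attempts):
        base = log.latest_version()
        # On a retry, a real engine would first verify that the commits made after its
        # original base did not touch the same data; this sketch treats every write
        # as a non-conflicting append.
        if log.try_commit(base + 1, actions):
            return base + 1
    raise RuntimeError(f"gave up after {max_attempts} conflicting attempts")


log = VersionedLog()
versions = []


def writer(i: int) -> None:
    versions.append(commit_with_retry(log, [f"add part-{i}.parquet"]))


threads = [threading.Thread(target=writer, args=(i,)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(versions))  # [0, 1, 2, 3, 4, 5, 6, 7]: every writer got a distinct version
```

Each failed attempt means some other writer succeeded in the meantime, so with eight writers no one fails more than seven times, and the default of ten attempts is enough.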

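Both formats also version data. Each Iceberg commit produces a new snapshot, and queries can read an older snapshot by ID or timestamp (time travel). Delta Lake records every commit as a numbered version in its transaction log and likewise lets queries read a table as of an earlier version or timestamp, which provides a high level of data integrity and consistency. Both support data partitioning for efficient storage and querying, but Iceberg's scheme is more flexible. It uses hidden partitioning: partition values are derived from source columns through transforms such as `day`, `bucket`, or `truncate`, so queries filter on the original columns, and the partition spec can evolve without rewriting existing data. Delta Lake traditionally uses explicit, Hive-style partition columns that writers must populate.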
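Iceberg implements its partitioning flexibility through hidden partitioning, where the table stores a transform of a source column rather than a separate user-maintained column. Iceberg's `day` transform maps a timestamp to the number of days since 1970-01-01, and `truncate[W]` on integers rounds down to a multiple of W. The functions below reimplement those two transforms in plain Python as an illustration, not as the Iceberg library. The `prune_files` helper and the file names are hypothetical: they show how a filter on the original `event_ts` column can be converted into a filter on partition values, which is what spares users the extra date column that Hive-style partitioning requires.

```python
from __future__ import annotations

from datetime import date, datetime, timezone

EPOCH = date(1970, 1, 1)


def day_transform(ts: datetime) -> int:
    """Iceberg-style `day` transform: whole days since 1970-01-01, computed in UTC."""
    if ts.tzinfo is None:
        raise ValueError("expected a timezone-aware timestamp")
    return (ts.astimezone(timezone.utc).date() - EPOCH).days


def truncate_int(value: int, width: int) -> int:
    """Iceberg-style `truncate[width]` for integers: round down to a multiple of width."""
    return value - (value % width)  # Python's % is floor-based, so negatives round down too


def prune_files(partitions: dict[str, int], start: datetime, end: datetime) -> list[str]:
    """Keep files whose `day` partition may hold rows with start <= event_ts <= end.

    `partitions` maps a data file path to the day value recorded for it at write time.
    The query only mentions event_ts; the matching day range is derived from it.
    """
    lo, hi = day_transform(start), day_transform(end)
    return sorted(path for path, day in partitions.items() if lo <= day <= hi)


# Writers compute the partition value from the source column; readers never see it.
utc = timezone.utc
files = {
    "f-0314.parquet": day_transform(datetime(2024, 3, 14, 18, 0, tzinfo=utc)),
    "f-0315.parquet": day_transform(datetime(2024, 3, 15, 9, 30, tzinfo=utc)),
    "f-0316.parquet": day_transform(datetime(2024, 3, 16, 1, 0, tzinfo=utc)),
}
# Equivalent of: WHERE event_ts BETWEEN '2024-03-15 00:00' AND '2024-03-15 23:59' (UTC)
start = datetime(2024, 3, 15, tzinfo=utc)
end = datetime(2024, 3, 15, 23, 59, tzinfo=utc)
print(prune_files(files, start, end))             # ['f-0315.parquet']
print(truncate_int(13, 10), truncate_int(-1, 10))  # 10 -10
```

Because the transform lives in table metadata rather than in user code, changing it later (for example from `day` to `hour`) only affects newly written files, which is the basis of Iceberg's partition evolution.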

Use Cases and Deployment Scenarios

Apache Iceberg and Delta Lake can be deployed in a variety of scenarios, catering to different data management needs. Iceberg is well suited to large-scale data storage and processing, such as data warehousing, data lakes, and real-time analytics, and its engine-neutral, metadata-driven design makes it attractive when several query engines need to share the same tables. Delta Lake targets use cases that demand high data reliability and performance, such as financial transaction records, IoT data processing, and machine learning workflows, particularly in organizations already standardized on Spark or Databricks.

For deployment, both technologies can be integrated with existing data pipelines and infrastructure. Iceberg runs on cloud object stores, on-premises HDFS, and hybrid environments, with tables registered in a catalog such as Hive Metastore, AWS Glue, or a REST catalog. Delta Lake likewise works on cloud object stores, HDFS, and local file systems, but it is most often deployed in the cloud, where it integrates closely with Databricks and other cloud data services.
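Because both formats are commonly used from Apache Spark, session configuration offers one concrete view of the deployment difference. The sketch below builds, as plain Python dictionaries, the configuration keys documented for Iceberg's Spark catalog and for Delta Lake's Spark integration. The catalog name `lake`, the warehouse URI, and the helper functions are hypothetical, and the runtime JARs (whose versions must match your Spark and Scala versions) are not shown. For Iceberg's file-system (`hadoop`) catalog, the warehouse URI decides whether tables live in a cloud bucket, on on-premises HDFS, or on local disk.

```python
from __future__ import annotations


def iceberg_conf(catalog: str, warehouse: str) -> dict[str, str]:
    """Spark settings for an Iceberg catalog backed by a file-system warehouse (type=hadoop)."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse,  # e.g. an s3a://, hdfs://, or file:// URI
    }


def delta_conf() -> dict[str, str]:
    """Spark settings that enable Delta Lake SQL support in the session catalog."""
    return {
        "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
        "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
    }


def merge_confs(*confs: dict[str, str]) -> dict[str, str]:
    """Combine configs; spark.sql.extensions takes a comma-separated class list, so join it."""
    merged: dict[str, str] = {}
    for conf in confs:
        for key, value in conf.items():
            if key == "spark.sql.extensions" and key in merged:
                merged[key] = f"{merged[key]},{value}"
            else:
                merged[key] = value
    return merged


# Hypothetical on-premises warehouse; an s3a:// or abfss:// URI would target cloud storage.
conf = merge_confs(iceberg_conf("lake", "hdfs://namenode:8020/warehouse"), delta_conf())
for key, value in sorted(conf.items()):
    print(f"{key}={value}")

# With PySpark installed and the matching JARs on the classpath, the dict could be applied as:
#   builder = SparkSession.builder
#   for key, value in conf.items():
#       builder = builder.config(key, value)
#   spark = builder.getOrCreate()
```

Keeping the settings as data rather than hard-coding them in a session builder makes it easy to point the same job at a different warehouse when moving between cloud, on-premises, and hybrid environments.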

What is the primary difference between Apache Iceberg and Delta Lake?

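The primary difference between Apache Iceberg and Delta Lake lies in their design principles and architectures. Iceberg centers on engine-neutral metadata management and snapshot-based versioning, whereas Delta Lake centers on a transaction log that emphasizes data reliability and performance, with its tightest integration in the Spark and Databricks ecosystem.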

Which technology is better suited for large-scale data storage and processing?

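Both formats are built for large-scale data. Apache Iceberg is particularly well suited to it because its metadata tree lets engines plan queries without listing directories and its open table format lets many engines share the same tables, providing a flexible and scalable solution for data management.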

Can Delta Lake be used for real-time analytics and data warehousing?

Yes. Although Delta Lake's primary focus is providing a transactional storage layer for reliable data management, it is also used for real-time analytics and data warehousing; for example, Delta tables can act as both sources and sinks for Spark Structured Streaming.

In conclusion, Apache Iceberg and Delta Lake are both powerful open table formats designed to address the limitations of traditional data warehousing. By understanding the features, functionality, and use cases of each, organizations can make informed decisions about their data management needs. Whether you prioritize metadata management and engine neutrality, data versioning, or data reliability within a Spark-centric stack, one of these lakehouse table formats can cater to your specific requirements.