Convolutional Neural Networks (CNNs) have become a cornerstone of image and video processing, delivering strong performance in tasks such as object detection, image classification, and segmentation. A fundamental part of working with CNNs is understanding how their output is calculated, which matters for training, testing, and deploying these models. This article covers the primary methods of calculating CNN outputs, from output dimensions to the final class probabilities, for both beginners and seasoned professionals in the field.

Key Points

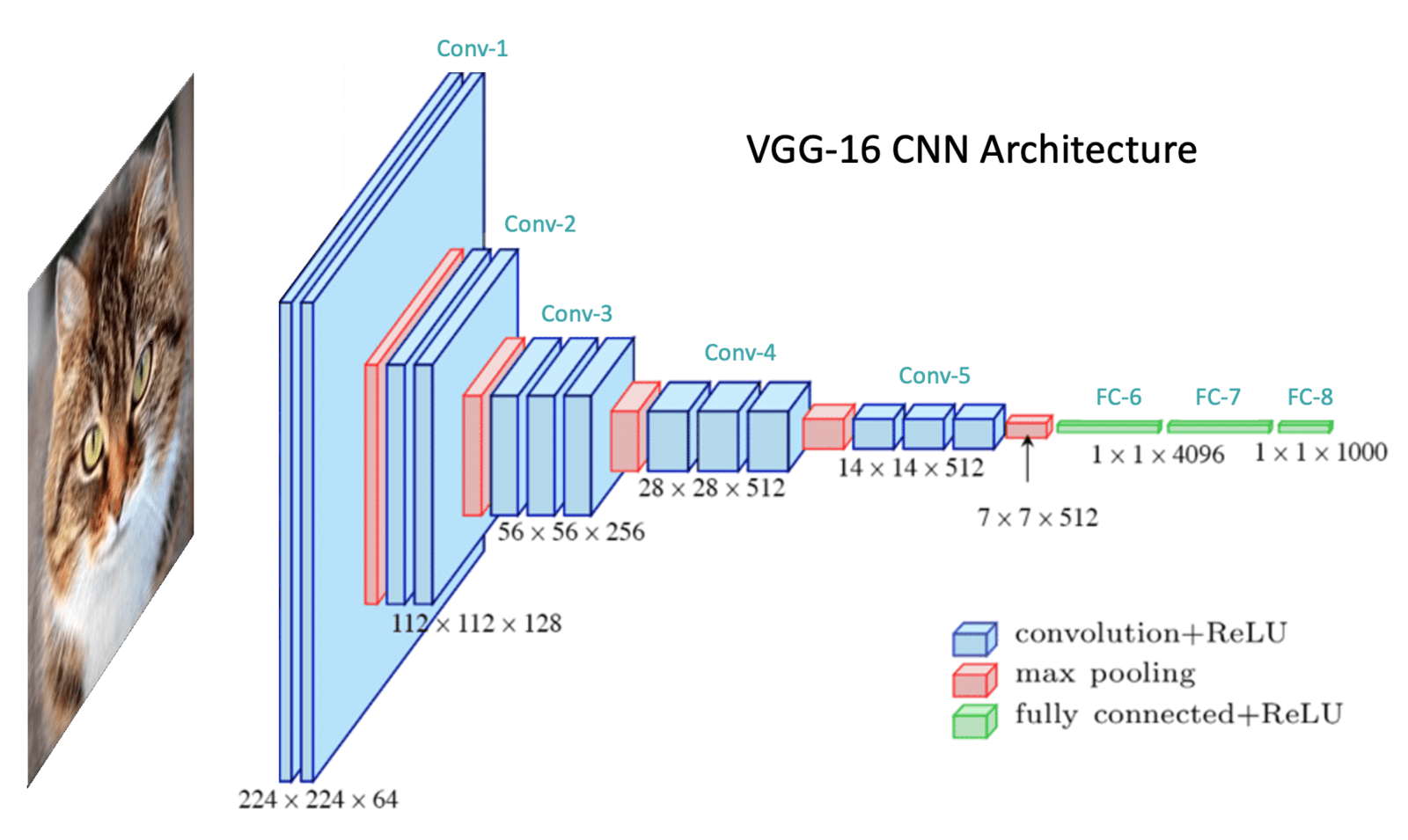

- Understanding the basic architecture of CNNs, including convolutional layers, pooling layers, and fully connected layers.

- Calculating output dimensions after convolutional and pooling layers using specific formulas.

- Applying the softmax activation function for multi-class classification tasks.

- Utilizing the ReLU activation function for hidden layers to introduce non-linearity.

- Implementing forward propagation to compute the output of a CNN for a given input.

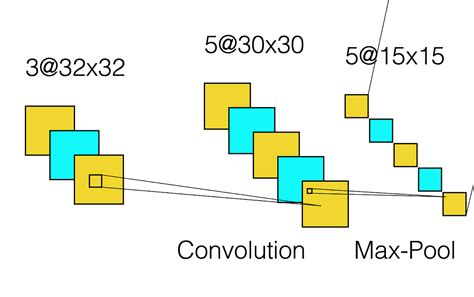

Calculating Output Dimensions

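Before diving into the detailed calculation of CNN outputs, it is essential to understand how the dimensions of the output change as the input passes through the layers. The output height after a convolutional layer can be calculated using the formula: output_height = floor((input_height - filter_height + 2 * padding) / stride) + 1, and the same formula applies to the width with input_width and filter_width. The floor is needed because the division is not always exact: filter positions that would overhang the padded input are dropped. For example, a 32×32 input with a 5×5 filter, no padding, and a stride of 1 yields a 28×28 output. The formula shows how filter size, padding, and stride each affect the output dimensions: larger filters and strides shrink the output, while padding enlarges it.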
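The dimension formula is easy to check numerically. The following is a minimal sketch, assuming a square or per-axis calculation; the function name `conv_output_size` and its parameter names are illustrative choices, not part of any library.

```python
def conv_output_size(input_size, filter_size, padding=0, stride=1):
    """Output size along one axis (height or width) of a convolutional layer."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    padded = input_size + 2 * padding
    if filter_size > padded:
        raise ValueError("filter is larger than the padded input")
    # Floor division drops positions where the filter would overhang the input.
    return (padded - filter_size) // stride + 1


# 32-pixel axis, 5-wide filter, no padding, stride 1
print(conv_output_size(32, 5))                         # 28
# A 3-wide filter with padding 1 preserves the size
print(conv_output_size(32, 3, padding=1))              # 32
# 224-pixel axis, 7-wide filter, padding 3, stride 2
print(conv_output_size(224, 7, padding=3, stride=2))   # 112
```

Calling the function once for the height and once for the width gives the full spatial shape of the layer output.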

Convolutional Layer Output Calculation

A convolutional layer's output is calculated by sliding filters over the input and computing a dot product between the filter weights and the input patch at each position. Each filter produces one feature map, so the number of feature maps equals the number of filters. Each filter learns to detect a specific feature, and the collection of feature maps forms the output of the layer. The calculation can be written as output = σ(W * input + b), where σ is the activation function, W holds the filter weights (the kernel), input is the input to the layer, and b is the bias term. Here * denotes the convolution operation rather than ordinary multiplication; in practice, deep learning frameworks compute it as a cross-correlation, meaning the filter is not flipped.
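To make the sliding dot product concrete, here is a minimal pure-Python sketch for a single-channel input and a single filter, with no padding and ReLU as the activation σ. The function name `conv2d_single` and the sample values are made up for illustration; real layers handle multiple channels and filters.

```python
def conv2d_single(image, kernel, bias=0.0, stride=1):
    """Cross-correlate one 2D input with one filter (no padding), then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the filter and the patch at position (i, j).
            total = bias
            for a in range(kh):
                for b in range(kw):
                    total += kernel[a][b] * image[i * stride + a][j * stride + b]
            row.append(max(0.0, total))  # ReLU activation
        feature_map.append(row)
    return feature_map


image = [
    [1, 2, 0, 1],
    [0, 1, 3, 1],
    [2, 1, 0, 0],
    [1, 0, 1, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_single(image, kernel)
for row in feature_map:
    print(row)
# [0.0, 0.0, 0.0]
# [0.0, 1.0, 3.0]
# [2.0, 0.0, 0.0]
```

The 4×4 input and 2×2 filter give a 3×3 feature map, matching the output dimension formula, and every negative response is clipped to zero by ReLU.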

| Layer Type | Output Calculation Formula |
|---|---|
| Convolutional Layer | output = σ(W * input + b) |
| Pooling Layer | output = pool(input, kernel_size, stride) |
| Fully Connected Layer | output = σ(W * input + b) |
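The pooling row can be illustrated with a short sketch. The table leaves the pooling function unspecified, so this example assumes max pooling, which is one common choice; `max_pool2d` is a hypothetical helper name, and the input values are made up.

```python
def max_pool2d(feature_map, kernel_size=2, stride=2):
    """Max pooling over a 2D feature map; there are no weights or biases to learn."""
    out_h = (len(feature_map) - kernel_size) // stride + 1
    out_w = (len(feature_map[0]) - kernel_size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + a][j * stride + b]
                for a in range(kernel_size)
                for b in range(kernel_size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 0],
    [7, 2, 9, 8],
    [0, 1, 3, 4],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 4], [7, 9]]
```

With a 2×2 window and a stride of 2, each output value is the maximum of one non-overlapping block, so the 4×4 map shrinks to 2×2.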

Forward Propagation for Output Calculation

Forward propagation is the process of passing an input through the network, layer by layer, to calculate the output. Each layer takes the previous layer's output as its input. Convolutional and fully connected layers combine their input with learned weights and biases and then apply an activation function, while pooling layers have no learned parameters and simply downsample their input. The process continues until the final output is obtained from the last layer of the network.
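The layer-by-layer flow can be sketched end to end for a toy network. This is an illustrative example only: it assumes a single-channel 5×5 input, one 2×2 filter, ReLU, 2×2 max pooling, and a two-class fully connected layer with softmax. All weights are arbitrary placeholder values rather than trained parameters, and the `params` dictionary layout is a made-up convention.

```python
import math


def conv2d(image, kernel, bias):
    """Stride-1, no-padding cross-correlation of a square input with a square filter."""
    k = len(kernel)
    size = len(image) - k + 1
    return [
        [
            bias + sum(kernel[a][b] * image[i + a][j + b]
                       for a in range(k) for b in range(k))
            for j in range(size)
        ]
        for i in range(size)
    ]


def relu(feature_map):
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling (stride equals the window size)."""
    n = len(feature_map) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(n)
        ]
        for i in range(n)
    ]


def flatten(feature_map):
    return [v for row in feature_map for v in row]


def dense(x, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image, params):
    x = conv2d(image, params["kernel"], params["conv_bias"])  # 5x5 -> 4x4
    x = relu(x)
    x = max_pool(x)                                           # 4x4 -> 2x2
    x = flatten(x)                                            # 2x2 -> 4 values
    logits = dense(x, params["fc_weights"], params["fc_biases"])
    return softmax(logits)                                    # class probabilities


image = [
    [0, 1, 2, 1, 0],
    [1, 2, 3, 2, 1],
    [2, 3, 4, 3, 2],
    [1, 2, 3, 2, 1],
    [0, 1, 2, 1, 0],
]
params = {
    "kernel": [[1.0, -1.0], [0.5, 0.5]],   # placeholder filter weights
    "conv_bias": 0.1,
    "fc_weights": [[0.2, -0.1, 0.4, 0.3],  # placeholder weights, 2 classes x 4 inputs
                   [-0.3, 0.5, 0.1, -0.2]],
    "fc_biases": [0.0, 0.1],
}
probs = forward(image, params)
print(probs)
```

Each function corresponds to one layer type, and `forward` simply chains them in order, which is all forward propagation amounts to at inference time.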

Output Calculation for Classification Tasks

In classification tasks, the final layer typically uses the softmax activation function to turn the raw scores (logits) into probabilities that sum to 1. For an input vector x, the i-th output is defined as softmax(x)_i = exp(x_i) / Σ_j exp(x_j), where the sum runs over all elements of x. Every output is positive and the outputs sum to 1, so each one can be interpreted as the probability of the input belonging to the corresponding class.
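A minimal sketch of softmax in pure Python follows. Subtracting the largest logit before exponentiating is a common numerical-stability trick that this example assumes; it does not change the result, because the common factor cancels in the ratio. The logit values are made up.

```python
import math


def softmax(logits):
    """Convert a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # shifting by the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The predicted class is the index of the largest probability, which is always the index of the largest logit, since softmax preserves ordering.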

How do you calculate the output dimensions after a convolutional layer?

The output dimensions after a convolutional layer can be calculated using the formula: output_height = floor((input_height - filter_height + 2 * padding) / stride) + 1. The same formula applies to the output width, using the input and filter widths.

What is the role of the softmax activation function in CNNs?

The softmax activation function is used in the output layer of CNNs for multi-class classification tasks. It ensures that the output values are probabilities that sum up to 1, allowing for the interpretation of the output as probabilities of the input belonging to each class.

How does forward propagation calculate the output of a CNN?

Forward propagation passes the input through the network one layer at a time. Convolutional and fully connected layers compute their output from the inputs, weights, and biases and then apply an activation function, while pooling layers downsample without any learned parameters. Each layer's output becomes the next layer's input until the final output is obtained.

In conclusion, calculating the output of a CNN involves understanding the architecture, including convolutional and pooling layers, and how these layers affect the output dimensions. The use of appropriate activation functions, such as softmax for classification tasks, is also crucial. By applying these principles and using forward propagation, one can compute the output of a CNN for a given input, which is essential for training, testing, and deploying these models in real-world applications.