Creating dataset features is a crucial step in the machine learning pipeline, because the features a model sees largely determine how well it can perform. As datasets grow more complex, efficient methods for feature creation become essential. In this article, we explore practical techniques and best practices for creating dataset features, combining domain knowledge with sound technical methods.

Key Points

- Understanding the importance of feature creation in machine learning

- Techniques for creating dataset features, including data transformation and feature extraction

- Best practices for feature creation, such as handling missing values and data normalization

- Tools and libraries for efficient feature creation in Python and R

- Real-world examples of successful feature creation in various domains

Introduction to Feature Creation

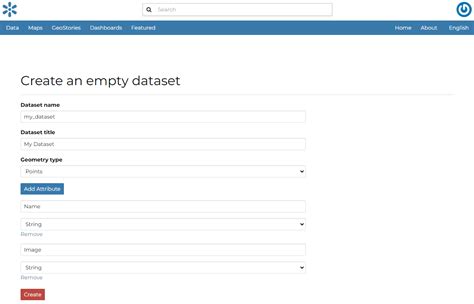

Feature creation is the process of transforming and combining raw data into meaningful features that can be used by machine learning algorithms. The goal is to create features that are informative, relevant, and useful for the model to learn from. Effective feature creation can significantly improve the performance of machine learning models, while poor feature creation can lead to suboptimal results.

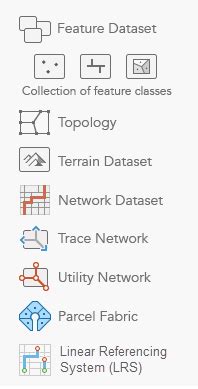

Types of Feature Creation

There are several types of feature creation, including:

- Data Transformation: This changes the representation of existing features, such as converting categorical variables into numerical ones or rescaling numeric columns.

- Feature Extraction: This derives new, usually more compact or informative, features from raw or high-dimensional data, such as extracting keywords from text.

- Feature Construction: This builds new features by combining existing ones, often guided by domain knowledge, such as dividing a house's price by its floor area to get price per square metre.
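The three types can be illustrated on a pair of toy housing records. This is a minimal sketch in plain Python, assuming hypothetical column names (`category`, `description`, `price`, `area`); the word count stands in for a real text-extraction step such as keyword extraction.

```python
# Toy records; the column names are hypothetical and chosen for illustration.
records = [
    {"category": "flat", "description": "bright flat near the park", "price": 300000, "area": 60},
    {"category": "house", "description": "quiet house with garden", "price": 450000, "area": 120},
]

# Fixed, sorted list of known categories so every record gets the same columns.
CATEGORIES = sorted({r["category"] for r in records})

def make_features(record):
    features = {}
    # Data transformation: one-hot encode the categorical column.
    for cat in CATEGORIES:
        features[f"category={cat}"] = 1 if record["category"] == cat else 0
    # Feature extraction: derive a numeric signal from raw text.
    features["description_words"] = len(record["description"].split())
    # Feature construction: combine two existing columns into a ratio.
    features["price_per_area"] = record["price"] / record["area"]
    return features

rows = [make_features(r) for r in records]
for row in rows:
    print(row)
```

Each output row is a flat dictionary of numeric features, which is the form most model-training code expects.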

Techniques for Feature Creation

There are several techniques for feature creation, including:

Data Transformation Techniques

Data transformation techniques involve transforming existing features into new ones. Some common techniques include:

- Scaling: This rescales numerical features to a common range, typically between 0 and 1 (min-max scaling).

- Encoding: This converts categorical variables into numerical ones, for example with one-hot encoding, which creates one binary column per category.

- Standardizing (often loosely called normalizing): This rescales numerical features to have a mean of 0 and a standard deviation of 1.
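These transformations can each be written in a few lines of plain Python. The function names below are hypothetical and the sketch is illustrative only; in practice, library implementations such as scikit-learn's `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` are the usual choice.

```python
import math

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to mean 0 and scale to standard deviation 1."""
    mean = sum(values) / len(values)
    # Population standard deviation (ddof=0), the convention StandardScaler uses.
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

def one_hot(values):
    """Return one binary column per distinct category, plus the category order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories

ages = [20, 30, 40]
print(min_max_scale(ages))  # [0.0, 0.5, 1.0]
print(standardize(ages))    # approximately [-1.2247, 0.0, 1.2247]
encoded, cats = one_hot(["red", "green", "red"])
print(cats, encoded)        # ['green', 'red'] [[0, 1], [1, 0], [0, 1]]
```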

Feature Extraction Techniques

Feature extraction techniques involve extracting new features from existing ones. Some common techniques include:

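- Principal Component Analysis (PCA): This projects the data onto a smaller set of new features, the principal components, which are linear combinations of the original features ordered by how much variance they capture.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): This produces a low-dimensional embedding that preserves the local neighborhood structure of the data. It is mainly a visualization tool: because it does not learn a mapping that can be applied to new data points, it is rarely used to create model inputs.

- Word Embeddings: This represents words as dense numeric vectors learned from large text corpora, such as Word2Vec or GloVe vectors.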
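Principal components can be computed from the singular value decomposition (SVD) of the mean-centered data matrix. The following is a minimal NumPy sketch, assuming a small numeric array; the `pca` helper is hypothetical, and scikit-learn's `sklearn.decomposition.PCA` is the usual library choice.

```python
import numpy as np

def pca(X, n_components):
    """Project the rows of X onto the top principal components."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of Vt are the principal directions, sorted by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    # Variance along each kept direction (matches the sample covariance eigenvalues).
    explained = S[:n_components] ** 2 / (X.shape[0] - 1)
    return X_centered @ components.T, explained

# Two strongly correlated columns: nearly all variance lies along one direction.
X = np.array([[1.0, 2.0], [2.0, 4.1], [3.0, 5.9], [4.0, 8.2]])
Z, explained = pca(X, n_components=1)
print(Z.shape)  # (4, 1)
print(explained[0] / X.var(axis=0, ddof=1).sum())  # fraction of total variance kept
```

Here a single component replaces two correlated columns while keeping almost all of their variance, which is the typical reason to use PCA for feature extraction.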

Best Practices for Feature Creation

There are several best practices for feature creation, including:

Handling Missing Values

Missing values can significantly affect the performance of machine learning models: many algorithms cannot accept them at all, and simply dropping incomplete rows discards information. Some common techniques for handling missing values include:

- Imputation: This replaces missing values with a statistic computed from the observed values, such as the mean or median.

- Interpolation: This estimates missing values from neighboring observations, for example with linear or polynomial interpolation. It suits ordered data such as time series.
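Both strategies can be sketched in plain Python on a short series of readings where `None` marks a missing value. The helper names are hypothetical; pandas (`fillna`, `interpolate`) and scikit-learn (`SimpleImputer`) provide production versions.

```python
import statistics

def median_impute(values):
    """Replace every None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]

def linear_interpolate(values):
    """Fill interior gaps with a straight line between the known neighbors.
    Leading or trailing gaps stay None because one neighbor is missing."""
    result = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            result[i] = values[left] + step * (i - left)
    return result

readings = [10.0, None, 14.0, None, None, 20.0]
print(median_impute(readings))       # [10.0, 14.0, 14.0, 14.0, 14.0, 20.0]
print(linear_interpolate(readings))  # [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]
```

Median imputation ignores the ordering of the readings, while interpolation uses it, which is why interpolation usually gives more plausible values for a steadily changing series.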

Data Normalization

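Data normalization puts features on comparable scales, which matters for models that are sensitive to feature magnitude, such as distance-based methods like k-nearest neighbors and models trained with gradient descent. Tree-based models are largely unaffected by it. Some common techniques for data normalization include:

- Min-Max Scaling: This rescales numerical features to a fixed range, usually 0 to 1, using (x - min) / (max - min).

- Standardization: This rescales numerical features to have a mean of 0 and a standard deviation of 1 by subtracting the mean and dividing by the standard deviation.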
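Whichever method is used, the scaling statistics should be learned from the training data only and then reused unchanged on validation and test data, so that no information from those sets leaks into training. A minimal sketch of this fit/transform pattern for min-max scaling, using a hypothetical `MinMaxFeatureScaler` class loosely modeled on scikit-learn's transformer interface:

```python
class MinMaxFeatureScaler:
    """Hypothetical minimal scaler: learns min and max from training data only."""

    def fit(self, values):
        self.lo = min(values)
        self.hi = max(values)
        return self

    def transform(self, values):
        span = self.hi - self.lo
        if span == 0:  # constant training column: map everything to 0
            return [0.0 for _ in values]
        return [(v - self.lo) / span for v in values]

train = [10.0, 20.0, 30.0]
test = [25.0, 40.0]

scaler = MinMaxFeatureScaler().fit(train)  # statistics come from train only
print(scaler.transform(train))  # [0.0, 0.5, 1.0]
print(scaler.transform(test))   # [0.75, 1.5]
```

The test value 40.0 lies above the training maximum and is scaled to 1.5, outside the 0 to 1 range. This is expected: refitting on the test data to "fix" it would leak test information into the features.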

Tools and Libraries for Feature Creation

There are several tools and libraries available for feature creation, including:

Python Libraries

Python is a popular language for feature creation, and there are several libraries available, including:

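- Pandas: This is a popular library for loading, cleaning, and reshaping tabular data.

- NumPy: This is the core library for numerical arrays and vectorized computation.

- Scikit-learn: This is a popular machine learning library that also provides ready-made preprocessing transformers such as `StandardScaler`, `OneHotEncoder`, and `SimpleImputer`.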
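As an illustration of how these libraries fit together, the following sketch uses pandas to hold a tiny table and scikit-learn to impute, standardize, and one-hot encode it in a single pipeline. It assumes pandas and scikit-learn are installed; the column names and values are made up for the example.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data with one missing numeric value.
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0],
    "city": ["Paris", "Lyon", "Paris"],
})

numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # fill missing ages with the median
    ("scale", StandardScaler()),                   # then rescale to mean 0, std 1
])

preprocess = ColumnTransformer(
    [
        ("num", numeric, ["age"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,  # always return a dense NumPy array
)

X = preprocess.fit_transform(df)
print(X.shape)  # (3, 3): one scaled age column plus one column per city
print(X)
```

Bundling the steps in one `ColumnTransformer` means the same fitted preprocessing can later be applied to new data with `preprocess.transform(...)`.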

R Libraries

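R is another popular language for feature creation, and several packages support it, including:

- dplyr: This is a popular package for data manipulation, such as filtering rows and creating new columns with `mutate()`.

- tidyr: This is a package for reshaping data into tidy form, for example with `pivot_longer()` and `pivot_wider()`.

- caret: This is a package for training and evaluating models that also offers preprocessing such as centering, scaling, and imputation through `preProcess()`.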

Real-World Examples of Feature Creation

Feature creation is a critical step in many real-world applications, including:

Image Classification

In image classification, feature creation involves extracting features from images, such as edges, textures, and shapes. Some common techniques include:

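- Convolutional Neural Networks (CNNs): The convolutional layers learn filters that respond to simple patterns such as edges in early layers and to more complex textures and shapes in deeper layers.

- Transfer Learning: This reuses a model pre-trained on a large image dataset as a feature extractor, for example by taking the activations of one of its last layers as features for a new task.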
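To make the idea of a learned filter concrete, the sketch below applies a single hand-written, Sobel-style vertical-edge kernel to a tiny synthetic image with NumPy. It is an illustration, not a CNN: a CNN learns the values of many such kernels from data instead of having them written by hand. Like the convolutional layers in common deep-learning libraries, the helper computes cross-correlation (no kernel flip); its name is hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding and return the response map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the pixels currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x6 image: dark left half, bright right half, so one vertical edge.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Sobel-style kernel that responds to dark-to-bright transitions from left to right.
kernel = np.array([[-1, 0, 1],
                   [-2, 0, 2],
                   [-1, 0, 1]], dtype=float)

response = conv2d_valid(image, kernel)
print(response)
```

Each row of the response is `[0, 4, 4, 0]`: the filter fires only where its window straddles the boundary, and the resulting map can serve as an "edge" feature.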

Natural Language Processing (NLP)

In NLP, feature creation involves extracting features from text data, such as keywords, sentiment, and topics. Some common techniques include:

- Word Embeddings: This represents words, and by aggregation whole documents, as dense vectors learned from large text corpora.

- Topic Modeling: This represents each document by its mixture of topics, as discovered by a model such as Latent Dirichlet Allocation (LDA).
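One simple way to turn word embeddings into a fixed-length document feature is to average the vectors of the words a document contains. The three-dimensional vectors below are hypothetical toy values; real Word2Vec or GloVe vectors are learned from large corpora and typically have tens to hundreds of dimensions.

```python
# Hypothetical toy embeddings; real ones are loaded from a trained model.
EMBEDDINGS = {
    "great": [0.9, 0.1, 0.0],
    "movie": [0.1, 0.8, 0.1],
    "boring": [-0.8, 0.2, 0.0],
}

def document_vector(text, embeddings, dim=3):
    """Average the embeddings of known words; unknown words are skipped."""
    vectors = [embeddings[w] for w in text.lower().split() if w in embeddings]
    if not vectors:
        return [0.0] * dim  # no known words: fall back to a zero vector
    # zip(*vectors) groups the i-th component of every word vector together.
    return [sum(component) / len(vectors) for component in zip(*vectors)]

print(document_vector("Great movie", EMBEDDINGS))   # approximately [0.5, 0.45, 0.05]
print(document_vector("boring movie", EMBEDDINGS))  # first component is negative
```

Averaging discards word order, but it gives every document a vector of the same length, which most classifiers require.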

| Feature Creation Technique | Description |
|---|---|
| Data Transformation | Changing the representation of existing features, such as encoding or scaling |
| Feature Extraction | Deriving new features from raw or high-dimensional data |
| Feature Construction | Combining existing features into new ones, often using domain knowledge |

What is feature creation in machine learning?

Feature creation is the process of transforming and combining raw data into meaningful features that can be used by machine learning algorithms.

What are some common techniques for feature creation?

Some common techniques for feature creation include data transformation, feature extraction, and feature construction.

What are some best practices for feature creation?

Some best practices for feature creation include handling missing values, data normalization, and using the right tools and libraries.

In conclusion, feature creation is a critical step in the machine learning pipeline, and there are several techniques and tools available for creating dataset features easily. By understanding the importance of feature creation and using the right techniques and tools, you can create features that are informative, relevant, and useful for your machine learning models.