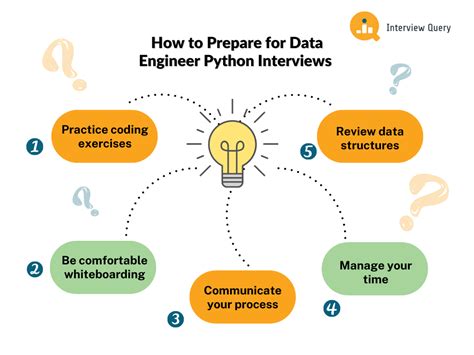

For a data engineer, proficiency in Python is essential for building, maintaining, and optimizing large-scale data systems. Python's simple syntax, flexibility, and extensive ecosystem of libraries make it a popular choice among data engineers. In this article, we explore common Python data engineer interview questions, the kinds of topics they cover, and how to approach them.

Key Points

- Understanding of Python basics, including data structures and file handling

- Familiarity with popular data engineering tools such as Pandas, NumPy, and Apache Spark (through its PySpark API)

- Knowledge of data processing techniques, including data cleaning, transformation, and aggregation

- Experience with data storage solutions, including relational databases and NoSQL databases

- Ability to design and implement data pipelines, including data ingestion, processing, and output

Python Fundamentals

Before diving into data engineering-specific questions, interviewers often assess your Python skills. Be prepared to answer questions about Python basics, such as data structures (lists, dictionaries, sets), file handling, and object-oriented programming concepts.
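File-handling questions often check that you close files reliably and pick the right standard-library tool. The sketch below is one minimal approach, assuming a small CSV file. The file name `users.csv` and its rows are made up for illustration. It uses context managers (`with`) so files are closed even if an error occurs, plus the `csv` module's `DictWriter` and `DictReader`.

```python
import csv
import tempfile
from pathlib import Path


def write_rows(path: Path, rows: list[dict]) -> None:
    """Write a non-empty list of dicts to a CSV file with a header row."""
    # newline="" is what the csv module docs recommend when opening CSV files
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


def read_rows(path: Path) -> list[dict]:
    """Read a CSV file back into a list of dicts (values come back as strings)."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


# Hypothetical file in a temporary directory that is deleted afterwards
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "users.csv"
    write_rows(path, [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Linus"}])
    loaded = read_rows(path)

print(loaded)  # [{'id': '1', 'name': 'Ada'}, {'id': '2', 'name': 'Linus'}]
```

Note that the IDs in `loaded` are strings: `csv.DictReader` does not convert types, which is one reason Pandas is often preferred for typed tabular data.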

Data Structures

Data structures are a crucial aspect of Python programming. You should be able to explain the differences between lists, dictionaries, and sets, and give examples of when to use each: a list for an ordered sequence of items that may contain duplicates, a dictionary for looking up values by key, and a set for storing unique items and testing membership quickly.

| Data Structure | Description |
|---|---|
| List | Ordered, mutable collection of items; duplicates allowed |
| Dictionary | Mutable mapping of unique keys to values; preserves insertion order since Python 3.7 |
| Set | Unordered collection of unique, hashable items |
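A quick way to show the differences in an interview is to apply all three structures to the same data. In this sketch the `events` list is hypothetical sample data.

```python
# Hypothetical event log used only for illustration
events = ["login", "click", "click", "logout", "click"]

# List: ordered and allows duplicates; slicing keeps the original order
first_two = events[:2]

# Dict: maps keys to values; here it counts occurrences of each event
counts = {}
for event in events:
    counts[event] = counts.get(event, 0) + 1

# Set: keeps only unique items and supports fast membership tests
unique_events = set(events)

print(first_two)                  # ['login', 'click']
print(counts)                     # {'login': 1, 'click': 3, 'logout': 1}
print(sorted(unique_events))      # ['click', 'login', 'logout']
print("logout" in unique_events)  # True
```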

Data Engineering Libraries

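Pandas and NumPy are popular Python libraries in data engineering, and Apache Spark, usually accessed from Python through PySpark, is a widely used distributed processing engine. You should be familiar with their features and use cases. For example, Pandas is commonly used for data manipulation and analysis, NumPy for numerical computations on arrays, and Spark for processing datasets too large for a single machine.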

Pandas

Pandas is a powerful library for data manipulation and analysis. You should be able to explain how to use Pandas to read data from and write data to various file formats, such as CSV and Excel. Additionally, you should be familiar with the two core Pandas data structures: the Series (a labeled one-dimensional array) and the DataFrame (a two-dimensional table made up of columns).
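As a minimal sketch of reading and writing CSV data with Pandas, the example below uses an in-memory string instead of a real file so that it runs anywhere. The column names and values are invented. Excel files follow the same pattern with `pd.read_excel` and `DataFrame.to_excel`, which additionally require an engine package such as openpyxl.

```python
import io

import pandas as pd

# In-memory CSV stands in for a file on disk; pd.read_csv also accepts a file path
raw_csv = io.StringIO("city,temp_c\nOslo,4\nLima,19\nCairo,25\n")
df = pd.read_csv(raw_csv)

# A DataFrame is a table of columns; selecting one column gives a Series
temps = df["temp_c"]
print(type(df).__name__, type(temps).__name__)  # DataFrame Series
print(temps.max())  # 25

# With no path argument, to_csv returns the CSV text; index=False omits the row index
out = df.to_csv(index=False)
print(out)
```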

Data Processing Techniques

Data processing is a critical aspect of data engineering. You should be able to explain various data processing techniques, including data cleaning, transformation, and aggregation. For instance, you can use Pandas to clean and transform data, and then use NumPy to perform numerical computations.
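The clean, transform, and aggregate sequence described above can be sketched on a small DataFrame. The sales data, the column names, and the choice of a base-10 log as the NumPy step are illustrative assumptions, not a prescribed workflow.

```python
import numpy as np
import pandas as pd

# Hypothetical sales records: inconsistent region labels and one missing amount
sales = pd.DataFrame(
    {
        "region": ["north", "North ", "south", "south", "east"],
        "amount": [120.0, 80.0, None, 200.0, 50.0],
    }
)

clean = (
    # Clean: trim whitespace and lower-case labels so "North " matches "north"
    sales.assign(region=sales["region"].str.strip().str.lower())
    # Clean: drop rows with no amount
    .dropna(subset=["amount"])
    # Transform: derive an integer amount in cents
    .assign(amount_cents=lambda d: (d["amount"] * 100).astype(int))
)

# Aggregate: total amount per region (groupby sorts the keys by default)
totals = clean.groupby("region")["amount"].sum()
print(totals.to_dict())  # {'east': 50.0, 'north': 200.0, 'south': 200.0}

# Numerical step with NumPy: base-10 log of each regional total
log_totals = np.log10(totals.to_numpy())
```

Chaining `assign` and `dropna` returns new DataFrames at each step, which keeps the original `sales` untouched and avoids modifying a slice in place.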

Data Cleaning

Data cleaning is the process of removing errors and inconsistencies from data. You should be able to explain how to use Pandas to handle missing data, remove duplicates, and perform data normalization.

| Data Cleaning Technique | Description |
|---|---|
| Handling Missing Data | Removing or replacing missing values |
| Removing Duplicates | Removing duplicate rows or columns |
| Data Normalization | Scaling data to a common range |
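Each technique in the table maps to a common Pandas call. In this sketch the readings are hypothetical, filling missing values with the median is one choice among several (dropping rows with `dropna` is another), and min-max scaling is used as the example of normalization.

```python
import pandas as pd

# Hypothetical sensor readings: one exact duplicate row and one missing value
readings = pd.DataFrame(
    {
        "sensor": ["a", "b", "b", "c", "d"],
        "value": [10.0, 20.0, 20.0, None, 40.0],
    }
)

# Handling missing data: replace NaN with the column median (dropna() would remove the row instead)
filled = readings.assign(value=readings["value"].fillna(readings["value"].median()))

# Removing duplicates: drop rows identical to an earlier row, then renumber the index
deduped = filled.drop_duplicates().reset_index(drop=True)

# Data normalization: min-max scaling of "value" into the range [0, 1]
v = deduped["value"]
normalized = deduped.assign(value_scaled=(v - v.min()) / (v.max() - v.min()))
print(normalized)
```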

Data Storage Solutions

Data storage solutions are essential for storing and retrieving large amounts of data. You should be familiar with relational databases, such as MySQL, and NoSQL databases, such as MongoDB. For example, you might use MySQL for structured data with a fixed schema and MongoDB for semi-structured data, such as JSON-like documents whose fields vary from record to record.

Relational Databases

Relational databases are designed to store structured data. You should be able to explain how to design and implement a relational database schema, including tables, indexes, and constraints.
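A schema-design answer is easier to follow with concrete DDL. The sketch below uses Python's built-in `sqlite3` module so it runs without a database server. The `customers` and `orders` tables are hypothetical, and MySQL syntax differs in details (for example, it uses `AUTO_INCREMENT` for generated keys).

```python
import sqlite3

# In-memory SQLite database; the same schema ideas carry over to MySQL or PostgreSQL
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on

conn.executescript(
    """
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE
    );

    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount      REAL NOT NULL CHECK (amount >= 0),
        created_at  TEXT NOT NULL
    );

    -- Index to speed up the common "orders for one customer" lookup
    CREATE INDEX idx_orders_customer ON orders(customer_id);
    """
)

conn.execute("INSERT INTO customers (id, email) VALUES (1, 'ada@example.com')")
conn.execute(
    "INSERT INTO orders (customer_id, amount, created_at) VALUES (?, ?, ?)",
    (1, 42.5, "2024-01-01"),
)

# Constraints reject bad data: a negative amount violates the CHECK constraint
rejected = False
try:
    conn.execute(
        "INSERT INTO orders (customer_id, amount, created_at) VALUES (?, ?, ?)",
        (1, -5, "2024-01-02"),
    )
except sqlite3.IntegrityError as exc:
    rejected = True
    print("rejected:", exc)

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(order_count)  # 1
conn.close()
```

The `UNIQUE`, `NOT NULL`, `CHECK`, and `REFERENCES` clauses push validation into the database itself, so invalid rows are rejected regardless of which application writes them.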

Data Pipelines

Data pipelines are used to process and transform data from various sources. You should be able to explain how to design and implement a data pipeline, including data ingestion, processing, and output. For instance, you can use Apache Spark to process large-scale data and Pandas to analyze and visualize the results.
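The ingestion, processing, and output stages can be illustrated without Spark by chaining plain Python functions. This is a minimal single-machine sketch under assumed inputs: the CSV source, the `user_id` and `amount` fields, and the JSON output format are all hypothetical. In a Spark job the same stages would typically correspond to reading a DataFrame, applying transformations, and writing the result.

```python
import csv
import io
import json
from collections.abc import Iterable, Iterator

# Hypothetical source: CSV text standing in for a file, API response, or object-store download
SOURCE = "user_id,amount\n1,10.5\n2,oops\n1,4.5\n3,7.0\n"


def ingest(text: str) -> Iterator[dict]:
    """Ingestion: parse raw CSV text into dict records, one at a time."""
    yield from csv.DictReader(io.StringIO(text))


def process(records: Iterable[dict]) -> dict[str, float]:
    """Processing: skip rows whose amount is not a number and sum amounts per user."""
    totals: dict[str, float] = {}
    for rec in records:
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # a real pipeline would usually log or quarantine bad rows
        totals[rec["user_id"]] = totals.get(rec["user_id"], 0.0) + amount
    return totals


def output(totals: dict[str, float]) -> str:
    """Output: serialize the results as JSON for a downstream consumer."""
    return json.dumps(totals, sort_keys=True)


result = output(process(ingest(SOURCE)))
print(result)  # {"1": 15.0, "3": 7.0}
```

Keeping each stage in its own function makes stages easy to test in isolation and to swap out, for example replacing `ingest` with a reader for a message queue.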

Data Ingestion

Data ingestion is the process of collecting data from various sources and moving it into a storage or processing system. You should be able to explain how to use tools such as Apache Kafka and Apache Flume to ingest data from sources such as logs, social media, and IoT devices.

| Data Ingestion Tool | Description |
|---|---|
| Apache Kafka | Distributed streaming platform |
| Apache Flume | Distributed, reliable, and available system for efficiently collecting, aggregating, and moving large amounts of log data |
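Kafka and Flume are separate systems with their own clients and configuration, so the sketch below does not use them. Instead it shows, in plain Python, a pattern that ingestion code commonly follows: reading newline-delimited JSON events from a stream and grouping them into fixed-size batches. The `read_batches` function and the sample log lines are hypothetical; in practice the stream might be a log file or messages from a Kafka consumer.

```python
import io
import json
from collections.abc import Iterator
from itertools import islice
from typing import IO


def read_batches(stream: IO[str], batch_size: int) -> Iterator[list[dict]]:
    """Yield lists of parsed JSON events, at most batch_size events per list."""
    # Skip blank lines; each remaining line is assumed to hold one JSON object
    events = (json.loads(line) for line in stream if line.strip())
    while True:
        batch = list(islice(events, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical log stream in newline-delimited JSON format
log_stream = io.StringIO(
    '{"level": "INFO", "msg": "start"}\n'
    '{"level": "WARN", "msg": "slow"}\n'
    "\n"
    '{"level": "INFO", "msg": "done"}\n'
)

batches = list(read_batches(log_stream, batch_size=2))
print([len(b) for b in batches])  # [2, 1]
```

Batching lets downstream writes (for example, bulk inserts into a database) happen in larger chunks instead of one event at a time.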

What is the difference between a data engineer and a data scientist?

A data engineer is responsible for designing, building, and maintaining large-scale data systems, while a data scientist is responsible for analyzing and interpreting complex data to gain insights and make informed decisions.

What are some common data engineering tools and technologies?

Some common data engineering tools and technologies include Apache Hadoop, Apache Spark, Apache Kafka, Apache Flume, and cloud-based platforms such as Amazon Web Services (AWS) and Microsoft Azure.

What is the importance of data quality in data engineering?

Data quality is essential in data engineering because analyses and decisions are only as reliable as the data behind them. Data engineers therefore work to keep data accurate, complete, and consistent before it reaches analysts and decision-makers.
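Data quality checks are often automated as part of a pipeline. The sketch below counts three common kinds of problems (missing required fields, duplicate IDs, and out-of-range values) in a batch of records. The field names, the `QualityReport` class, and the 0 to 130 age rule are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    missing: int     # records with a required field absent or empty
    duplicates: int  # records whose id was already seen
    invalid: int     # records failing a domain rule


def check_quality(records: list[dict], required: tuple[str, ...]) -> QualityReport:
    """Count completeness, uniqueness, and validity problems in a batch of records."""
    seen_ids = set()
    missing = duplicates = invalid = 0
    for rec in records:
        if any(rec.get(field) in (None, "") for field in required):
            missing += 1
        if rec.get("id") in seen_ids:
            duplicates += 1
        seen_ids.add(rec.get("id"))
        # Hypothetical domain rule: ages must fall in a plausible range
        age = rec.get("age")
        if isinstance(age, (int, float)) and not 0 <= age <= 130:
            invalid += 1
    return QualityReport(missing, duplicates, invalid)


# Hypothetical records with one problem of each kind
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 2, "email": "b@example.com", "age": 29},
    {"id": 3, "email": "c@example.com", "age": 240},
]
report = check_quality(rows, required=("id", "email"))
print(report)  # QualityReport(missing=1, duplicates=1, invalid=1)
```

A pipeline could compare such counts against thresholds and stop or alert before bad data is loaded downstream.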

In conclusion, Python data engineer interview questions are designed to assess your skills and knowledge in Python programming, data engineering libraries, data processing techniques, data storage solutions, and data pipelines. By preparing for these types of questions, you can demonstrate your expertise and increase your chances of success in a data engineering role.