Data engineering has grown in importance as the volume of data that organizations collect has increased. As a data engineer, you design, build, and maintain the infrastructure that stores, processes, and serves data. This guide covers the basics of data engineering: the key concepts, tools, and techniques you need to get started.

Key Points

- Understanding the role of a data engineer and their responsibilities

- Familiarity with data engineering concepts, including data warehousing, ETL, and data pipelines

- Knowledge of popular data engineering tools, such as Apache Hadoop, Apache Spark, and NoSQL databases

- Understanding of data storage solutions, including relational databases and cloud-based storage options

- Introduction to data processing frameworks, including batch processing and real-time processing

Introduction to Data Engineering

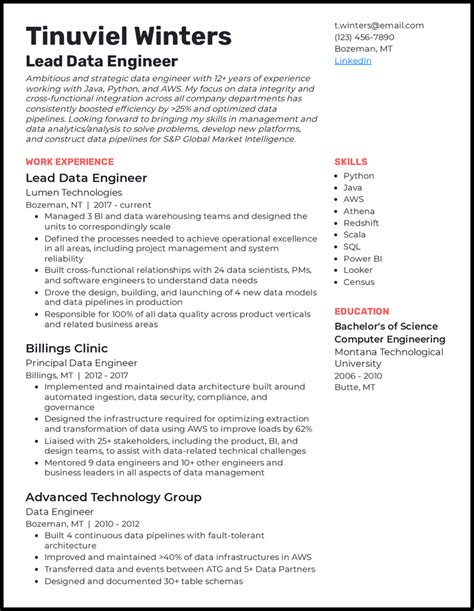

Data engineering is a field that combines elements of software engineering, data science, and data analysis to design and build systems that can handle large amounts of data. As a data engineer, your primary responsibility will be to ensure that data is properly collected, stored, processed, and retrieved. This involves working with various tools and technologies, including databases, data warehouses, ETL (Extract, Transform, Load) tools, and data processing frameworks.

The role of a data engineer is multifaceted and requires a strong understanding of computer science, software engineering, and data analysis. Some of the key responsibilities of a data engineer include:

- Designing and building data pipelines to extract data from various sources

- Developing and maintaining data warehouses and data lakes

- Implementing ETL processes to transform and load data into target systems

- Ensuring data quality and integrity by implementing data validation and data cleansing processes

- Optimizing data storage and retrieval systems for performance and scalability

Data Engineering Concepts

To get started with data engineering, you need to understand some key concepts, including:

- Data warehousing: consolidating data from various sources into a central repository (the data warehouse) that is structured for querying and analysis

- ETL: a process that extracts data from source systems, transforms it into a standardized format, and loads it into target systems

- Data pipelines: automated sequences of steps that move data from source systems to target systems, often transforming it along the way; an ETL job is one common kind of pipeline

- Data lakes: centralized repositories that store raw, unprocessed data in its native format

These concepts form the foundation of data engineering, and understanding them is crucial for building scalable and efficient data systems.
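To make the ETL idea concrete, here is a minimal sketch that uses only Python's standard library. The inline CSV data, the `orders` table, and the function names (`extract`, `transform`, `load`, `run_pipeline`) are illustrative assumptions, not part of any particular tool; a production pipeline would typically read from real source systems and run under a scheduler.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (inline so the sketch is self-contained).
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize names, parse amounts, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(amount))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Pipeline: chain the three steps from source to target."""
    load(transform(extract(RAW_CSV)), conn)


conn = sqlite3.connect(":memory:")  # in-memory database stands in for the target system
run_pipeline(conn)
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
```

Keeping each step as its own function makes it easier to test the transformation logic separately from the source and target systems.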

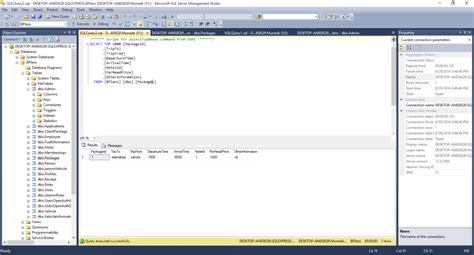

Data Engineering Tools and Technologies

Data engineers commonly work with a variety of tools and technologies, including:

- Apache Hadoop: an open-source framework for distributed storage (HDFS) and processing (MapReduce) of large datasets across clusters of machines

- Apache Spark: an open-source distributed data processing engine that keeps intermediate data in memory where possible, which speeds up the processing of large datasets

- NoSQL databases: non-relational databases that use data models such as key-value, document, and graph

- Cloud-based storage options: such as Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage

Together, these tools cover distributed processing, flexible data models, and durable storage, which are the building blocks of most scalable data systems.
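Hadoop's processing layer is built around the MapReduce model, and Spark offers similar map and reduce style operations. The sketch below imitates that model in plain Python on a single machine to show the idea. It does not use the Hadoop or Spark APIs, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`) are made up for illustration.

```python
from collections import defaultdict


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine each key's values into a single result."""
    return {key: sum(values) for key, values in groups.items()}


lines = ["big data big pipelines", "data lakes store raw data"]
word_counts = reduce_phase(shuffle_phase(map_phase(lines)))
print(word_counts)
```

In a real cluster, the map and reduce steps run in parallel on many machines and the shuffle moves data between them over the network; here everything runs in one process.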

Data Storage Solutions

Data storage is a critical component of data engineering, and there are several options available, including:

- Relational databases: a type of database that stores data in tables with well-defined relationships between them

- NoSQL databases: non-relational databases that use data models such as key-value, document, and graph

- Cloud-based storage options: such as Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage

Each of these options has its strengths and weaknesses, and the choice of data storage solution depends on the specific requirements of the project.
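As a rough illustration of how these storage models differ, the sketch below represents the same customer data in three ways. It uses Python's built-in `sqlite3` for the relational case, while a plain dictionary and JSON stand in for document and key-value stores. The table names, keys, and values are hypothetical, and real NoSQL databases and cloud object stores have their own client libraries that are not shown here.

```python
import json
import sqlite3

# Relational: fixed columns, with relationships expressed through keys and joins.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'alice');
    INSERT INTO orders VALUES (10, 1, 19.99), (11, 1, 5.00);
""")
total = conn.execute(
    "SELECT c.name, SUM(o.amount) FROM customers c "
    "JOIN orders o ON o.customer_id = c.id GROUP BY c.name"
).fetchone()

# Document style: one self-contained, nested record per customer.
document = {
    "id": 1,
    "name": "alice",
    "orders": [{"id": 10, "amount": 19.99}, {"id": 11, "amount": 5.00}],
}

# Key-value style: an opaque value looked up by a single key
# (a dict stands in for the store).
kv_store = {"customer:1": json.dumps(document)}
restored = json.loads(kv_store["customer:1"])

print(total[0], round(total[1], 2))
print(restored["orders"][0]["amount"])
```

The relational version answers new questions with joins and aggregates, while the document and key-value versions make it cheap to fetch one whole record by its key.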

Data Processing Frameworks

Data processing is a critical component of data engineering, and there are two main processing models:

- Batch processing: processes data in bounded groups (batches), typically as a scheduled job that runs over everything collected since the last run

- Real-time processing: processes each record shortly after it arrives, typically using a streaming architecture

Many data systems combine both models: batch jobs for large scheduled workloads and streaming for results that must be available within seconds.
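The difference between batch and real-time processing can be sketched in a few lines of plain Python. This is a simplified single-process illustration with made-up sensor readings; the names `batch_average` and `RunningAverage` are hypothetical, and real streaming systems also deal with ordering, windowing, and failures.

```python
def batch_average(readings):
    """Batch: the full dataset is available, so compute the result in one pass."""
    return sum(readings) / len(readings)


class RunningAverage:
    """Streaming: update the result incrementally as each event arrives."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def update(self, value):
        self.count += 1
        self.total += value
        return self.total / self.count


readings = [10.0, 20.0, 30.0, 40.0]

# Batch job: runs once over everything collected so far.
batch_result = batch_average(readings)

# Streaming job: emits an up-to-date answer after every event.
stream = RunningAverage()
stream_results = [stream.update(r) for r in readings]

print(batch_result)
print(stream_results)
```

Both approaches reach the same final average, but the streaming version has a usable answer after every event, while the batch version waits until all the data is in.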

| Tool | Description |
|---|---|
| Apache Hadoop | An open-source framework for distributed storage and processing of large datasets |
| Apache Spark | An open-source distributed data processing engine that provides high-performance processing of large datasets |
| NoSQL databases | Non-relational databases that use data models such as key-value, document, and graph |

Best Practices for Data Engineering

To ensure that your data systems are scalable, efficient, and reliable, follow these best practices:

- Design your data systems with scalability in mind

- Use data validation and data cleansing processes to ensure data quality and integrity

- Optimize your data storage and retrieval systems for performance

- Choose a data processing framework that matches your workload, such as batch processing for large scheduled jobs and real-time processing when results are needed quickly

By following these best practices, you can ensure that your data systems are reliable, efficient, and scalable.
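As one example of the data validation practice, the following sketch separates valid records from rejected ones and records a reason for each rejection. The rules (required `id`, unique `id`, non-negative numeric `amount`) and the field names are assumptions chosen for illustration; your own checks will depend on your data.

```python
from dataclasses import dataclass


@dataclass
class ValidationResult:
    valid: list
    rejected: list  # (record, reason) pairs


def validate(records):
    """Split records into valid rows and rejected rows with a reason."""
    result = ValidationResult(valid=[], rejected=[])
    seen_ids = set()
    for record in records:
        if record.get("id") is None:
            result.rejected.append((record, "missing id"))
        elif record["id"] in seen_ids:
            result.rejected.append((record, "duplicate id"))
        elif not isinstance(record.get("amount"), (int, float)) or record["amount"] < 0:
            result.rejected.append((record, "invalid amount"))
        else:
            seen_ids.add(record["id"])
            result.valid.append(record)
    return result


records = [
    {"id": 1, "amount": 19.99},
    {"id": 1, "amount": 5.00},   # duplicate id
    {"id": 2, "amount": -3.00},  # negative amount
    {"amount": 7.50},            # missing id
    {"id": 3, "amount": 12.00},
]

result = validate(records)
print([r["id"] for r in result.valid])
print([reason for _, reason in result.rejected])
```

Keeping the rejected rows and their reasons, rather than silently dropping them, makes it easier to trace data quality problems back to the source.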

What is the role of a data engineer?

A data engineer is responsible for designing, building, and maintaining the infrastructure that stores, processes, and retrieves data.

What are some key concepts in data engineering?

Some key concepts in data engineering include data warehousing, ETL, data pipelines, and data lakes.

What are some popular data engineering tools and technologies?

Some popular data engineering tools and technologies include Apache Hadoop, Apache Spark, NoSQL databases, and cloud-based storage options.
