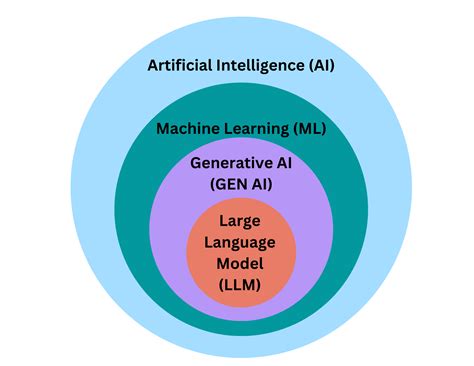

Artificial intelligence has advanced significantly in recent years, and large models and generative AI are two of the most notable developments. Although the two terms are often mentioned together, they describe distinct approaches to building intelligent systems. This article explores the principles and applications of each, and what they imply for the future of artificial intelligence.

Key Points

- Generative AI focuses on creating new content or data that resembles existing patterns, with applications in image and text generation.

- Large models, particularly large language models, have shown remarkable capabilities in understanding and generating human-like text, but also come with challenges such as energy consumption and bias.

- The training of large models requires vast amounts of data and computational resources, posing ethical and environmental concerns.

- Generative AI and large models can complement each other, with generative models potentially improving the efficiency and diversity of large model outputs.

- Future developments in AI will likely involve a combination of generative techniques and large-scale modeling, aimed at creating more versatile, efficient, and responsible AI systems.

Understanding Generative AI

Generative AI refers to a subset of artificial intelligence that is capable of generating new, synthetic data that resembles existing data. This can include images, videos, music, or text. The core idea behind generative AI is to learn the patterns and structures present in a dataset and then use this understanding to create novel, yet realistic, data points. Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), are commonly used in generative AI applications.
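
The "learn the patterns, then sample new data" idea can be illustrated with something far simpler than a GAN or VAE. The sketch below is a toy, not how production generative models work: it assumes the data is one-dimensional and roughly Gaussian, and the dataset and the function names `fit_gaussian` and `generate` are made up for illustration.

```python
import random
import statistics

def fit_gaussian(samples):
    """Learn the two parameters of a 1-D Gaussian from observed data."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, stdev, n, seed=0):
    """Draw n new synthetic points from the fitted distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Hypothetical "real" dataset: heights in centimetres.
real_heights = [158.0, 162.5, 171.0, 175.5, 168.0, 180.0, 165.5, 173.0]

mean, stdev = fit_gaussian(real_heights)
synthetic_heights = generate(mean, stdev, n=5)
print(synthetic_heights)
```

The generated values are not copies of the originals, but they follow the same overall distribution. GANs and VAEs pursue the same goal for much richer data such as images, replacing the two fitted parameters with a neural network.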

Applications of Generative AI

One of the most visible applications of generative AI is in the creation of deepfakes—images or videos that have been manipulated to replace the face of one person with another’s. While deepfakes have raised significant ethical concerns, generative AI also has positive applications, such as generating synthetic data for training other AI models, creating artwork, or even composing music. In the realm of text, generative AI can be used to create coherent and contextually relevant text based on a given prompt or style.
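
Prompt-based text generation can be sketched with a word-level bigram model, which extends a prompt by repeatedly sampling a word that followed the current last word in the training text. This is a deliberately tiny stand-in for modern text generators, and the corpus and the names `train_bigram` and `continue_prompt` are hypothetical.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record, for each word, the words observed to follow it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def continue_prompt(followers, prompt, max_new_words=8, seed=0):
    """Extend the prompt one word at a time by sampling observed followers."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_new_words):
        candidates = followers.get(output[-1])
        if not candidates:  # the last word was never seen with a follower
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

# Hypothetical training text.
corpus = (
    "the model learns patterns from data and the model generates text "
    "from patterns and the data shapes the text"
)
followers = train_bigram(corpus)
print(continue_prompt(followers, "the model"))
```

Every adjacent word pair in the output was seen in the corpus, which is why the result looks locally plausible. A bigram model conditions on a single previous word, so it cannot stay coherent over longer spans the way larger models can.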

Large Models: The Rise of Large Language Models

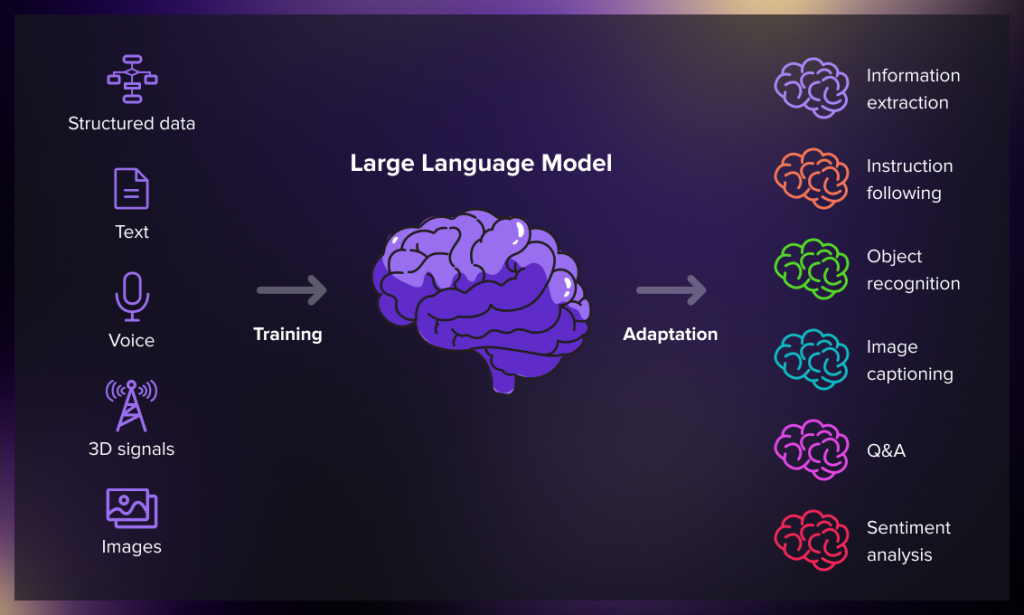

Large models, especially large language models (LLMs), have made headlines in the AI community due to their unprecedented ability to process and generate human-like text. Models like BERT and RoBERTa, both built on the transformer architecture, and more recently much larger transformer-based models, have demonstrated remarkable performance in a variety of natural language processing (NLP) tasks, from question answering and text classification to language translation and text generation.

Training Large Models

The training of large models requires enormous datasets and significant computational resources. For instance, Google’s BERT was pretrained on English Wikipedia and a large corpus of books (BooksCorpus), using a substantial amount of computational power. Training at this scale poses technical challenges and also raises ethical concerns: large-scale computation has an environmental cost, and biases present in the training data can be amplified in the model’s outputs.

| Model | Training Data Size | Computational Resources |
|---|---|---|
| BERT | 16 GB | 4-16 Cloud TPUs |
| RoBERTa | 160 GB | Up to 1024 V100 GPUs |
| Other large transformer models | Varying, up to 1.5 TB | Thousands of GPUs |
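
To get a feel for why scale translates into energy use, a back-of-the-envelope estimate helps. The sketch below uses a rule of thumb from the scaling-law literature, roughly 6 floating-point operations per parameter per training token for a dense transformer, and converts the result into energy. Every input (model size, token count, device throughput, utilization, power draw) is an illustrative assumption rather than a figure for any model in the table, and the function names are hypothetical.

```python
def training_flops(num_parameters, num_tokens):
    """Rule-of-thumb cost: about 6 FLOPs per parameter per training token."""
    return 6 * num_parameters * num_tokens

def training_energy_kwh(total_flops, flops_per_second_per_device,
                        utilization, watts_per_device):
    """Convert a FLOP budget into energy, given assumed hardware figures."""
    device_seconds = total_flops / (flops_per_second_per_device * utilization)
    joules = device_seconds * watts_per_device
    return joules / 3.6e6  # 1 kWh = 3.6 million joules

# All inputs below are illustrative assumptions, not measurements.
flops = training_flops(num_parameters=1e9, num_tokens=2e10)
energy = training_energy_kwh(
    flops,
    flops_per_second_per_device=1e14,  # assumed peak throughput
    utilization=0.4,                   # assumed fraction of peak achieved
    watts_per_device=400,              # assumed power draw per accelerator
)
print(f"{flops:.2e} FLOPs, about {energy:.0f} kWh")
```

The estimate covers accelerator power only. Cooling, networking, failed runs, and hyperparameter searches are left out, so real totals are likely higher.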

Comparing Generative AI and Large Models

While both generative AI and large models are groundbreaking in their respective capabilities, they approach the challenge of creating intelligent systems from different angles. Generative AI focuses on producing new data, which can offer a more flexible and creative approach to AI applications. Large models, on the other hand, have excelled at understanding and processing existing data, with a focus on accuracy and reliability in tasks such as language translation and question answering. The two categories also overlap: a large language model that writes text is both a large model and a generative one.

Future Directions: Integrating Generative AI and Large Models

The future of AI may lie in the integration of generative techniques with the capabilities of large models. By leveraging the strengths of both approaches, researchers could develop AI systems that are not only highly performant but also more efficient, diverse, and responsible. Generative models could potentially aid in the creation of synthetic data, reducing the need for real-world data and mitigating some of the ethical concerns associated with large-scale data collection and model training.
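
One way synthetic data could reduce the need for additional real-world data is by topping up under-represented classes in a training set. The following is a minimal sketch under strong assumptions: each class has a single numeric feature that is roughly Gaussian and at least two real examples. The function name `augment_minority` and the dataset are hypothetical, and a real pipeline would use a far more capable generator.

```python
import random
import statistics
from collections import defaultdict

def augment_minority(examples, target_per_class, seed=0):
    """Top up each class with samples drawn from a per-class Gaussian fit."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for value, label in examples:
        by_label[label].append(value)

    augmented = list(examples)  # real examples are kept unchanged
    for label, values in by_label.items():
        mean, stdev = statistics.mean(values), statistics.stdev(values)
        for _ in range(max(0, target_per_class - len(values))):
            augmented.append((rng.gauss(mean, stdev), label))
    return augmented

# Hypothetical imbalanced dataset of (feature, label) pairs.
real = [(1.0, "a"), (1.2, "a"), (0.9, "a"), (1.1, "a"), (3.0, "b"), (3.4, "b")]
balanced = augment_minority(real, target_per_class=4)
print(balanced)
```

Synthetic examples inherit whatever bias the real examples carry, so this approach reduces data collection without removing the need to audit the source data.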

In conclusion, generative AI and large models are two of the most exciting and rapidly evolving areas of artificial intelligence research. As these technologies continue to advance, it will be crucial to address the challenges they pose, from ethical considerations to environmental impact, so that their development aligns with societal values and benefits people.

What are the primary differences between generative AI and large models?

Generative AI focuses on creating new, synthetic data that resembles existing patterns, whereas large models, especially large language models, are designed to understand and generate human-like text based on vast amounts of training data.

What are some of the applications of generative AI?

Applications of generative AI include the creation of synthetic data for training AI models, generating artwork, composing music, and creating deepfakes, among others.

What are the challenges associated with training large models?

The training of large models requires enormous datasets and significant computational resources, posing ethical concerns regarding energy consumption and the potential for biases in the training data to be amplified in the model’s outputs.

How might generative AI and large models be integrated in the future?

Future developments may involve using generative models to aid in the creation of synthetic data, reducing the need for real-world data and potentially mitigating some of the ethical concerns associated with large-scale model training.

What are the implications of these technologies for the future of AI research?

The integration of generative AI and large models could lead to the development of more versatile, efficient, and responsible AI systems, offering new possibilities for applications across various industries and societal domains.