The concept of bytes and characters is fundamental in the realm of computer science and information technology. Understanding the relationship between these two is crucial for managing and processing data efficiently. A byte, which is a unit of digital information, typically consists of 8 bits and can represent a wide range of values. On the other hand, a character, which is a single symbol in a written language, can be represented by one or more bytes, depending on the encoding scheme used.

Key Points

- The size of a character in bytes varies based on the character encoding used, such as ASCII, UTF-8, or UTF-16.

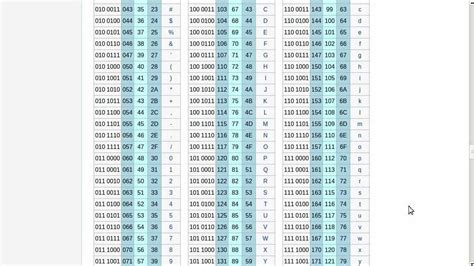

- In ASCII, each character is represented by 1 byte, allowing for 128 unique characters.

- UTF-8 is a variable-length encoding where a character can be represented by 1 to 4 bytes, enabling the representation of a much larger character set, including most languages.

- UTF-16, on the other hand, typically uses 2 bytes per character but can use 4 bytes for some characters, providing a balance between character representation and storage efficiency.

- The choice of character encoding affects not only storage requirements but also data processing and communication protocols.

Character Encoding and Byte Representation

Character encoding schemes are designed to translate characters into binary code that computers can understand. The most common encoding schemes include ASCII, UTF-8, and UTF-16, each with its own method of representing characters as bytes.

ASCII Encoding

ASCII (American Standard Code for Information Interchange) is one of the earliest and simplest encoding schemes. It uses 7 bits to represent each character, allowing for a total of 128 unique characters. Since a byte is 8 bits, ASCII characters fit within a single byte, with the most significant bit typically being 0. This encoding is sufficient for representing English characters, digits, and some control characters but lacks the capability to represent characters from other languages.

UTF-8 Encoding

UTF-8 (8-bit Unicode Transformation Format) is a more versatile encoding scheme designed to represent all Unicode characters. It is a variable-length encoding, meaning that the number of bytes used to represent a character can vary from 1 to 4 bytes. UTF-8 is backward compatible with ASCII, as the first 128 characters of Unicode (which correspond to the ASCII characters) are encoded in a single byte, just like in ASCII. However, characters outside this range are encoded in 2, 3, or 4 bytes, depending on their Unicode code point. This variable length allows UTF-8 to efficiently represent a vast array of characters while minimizing storage needs for text primarily composed of ASCII characters.

UTF-16 Encoding

UTF-16 (16-bit Unicode Transformation Format) uses either 2 or 4 bytes to represent each character. In UTF-16, the characters from the Basic Multilingual Plane (BMP), which includes most characters from most languages, are represented as 2 bytes. Characters outside the BMP, such as some less common or historic characters, are represented as 4 bytes using a surrogate pair mechanism. UTF-16 is widely used in many operating systems and applications for its ability to represent a broad range of characters with a fixed length for most cases, though it may not be as storage-efficient as UTF-8 for text that includes many ASCII characters.

| Encoding Scheme | Bytes per Character | Character Set |

|---|---|---|

| ASCII | 1 | 128 characters (English, digits, control characters) |

| UTF-8 | 1-4 | Unicode characters (most languages) |

| UTF-16 | 2 or 4 | Unicode characters (most languages, with surrogates for less common characters) |

Implications for Data Storage and Communication

The byte representation of characters has significant implications for data storage, processing, and communication. For instance, choosing an encoding scheme that efficiently represents the characters used in a particular application or dataset can save storage space and improve data transfer times. On the other hand, using an encoding scheme that is not optimized for the characters being used can lead to inefficiencies and potential errors in data processing.

Data Storage Considerations

In data storage, the choice of encoding scheme directly affects the amount of space required to store text data. For example, using UTF-8 to store English text can be more efficient than using UTF-16 because UTF-8 typically uses fewer bytes per character for ASCII characters. However, for text that includes many characters outside the ASCII range, UTF-16 might offer a more consistent and sometimes more efficient storage solution.

Data Communication Considerations

In data communication, the encoding scheme must be compatible with both the sender’s and receiver’s systems to ensure that data is transmitted correctly. Incompatibilities can lead to character corruption or misinterpretation, which can have serious consequences in certain applications. Standardizing on UTF-8 for web communications has helped mitigate these issues for international text exchange, given its broad support and flexibility.

What is the primary difference between ASCII, UTF-8, and UTF-16 encoding schemes?

+The primary difference lies in the number of bytes used to represent characters and the range of characters that can be represented. ASCII uses 1 byte per character and supports 128 characters, UTF-8 uses 1-4 bytes per character and supports all Unicode characters, and UTF-16 uses 2 or 4 bytes per character and also supports all Unicode characters but with a different representation method.

Why is UTF-8 widely used for web communications?

+UTF-8 is widely used because it is backward compatible with ASCII, efficient for representing a wide range of characters with variable length, and supported by most systems and browsers, making it a versatile and practical choice for international text exchange over the internet.

How does the choice of character encoding affect data storage efficiency?

+The choice of character encoding significantly affects data storage efficiency. Encodings like UTF-8 that use variable lengths can store ASCII characters (common in many texts) in fewer bytes than encodings like UTF-16 that use fixed lengths, potentially reducing storage needs for texts predominantly composed of ASCII characters.

In conclusion, understanding the byte representation of characters in different encoding schemes is essential for efficient data management and communication. By choosing the appropriate encoding scheme based on the specific requirements of an application or dataset, developers and system administrators can optimize storage efficiency, ensure data integrity, and facilitate smooth international communication.