Extracting text from documents, images, web pages, and other sources supports work ranging from data analysis and machine learning to content creation and research. Several methods and tools are available, each suited to different source types and purposes. This article covers five ways to extract text, discussing the techniques, tools, and considerations for each.

Key Points

- Optical Character Recognition (OCR) for extracting text from images and scanned documents

- Web scraping for gathering data from websites and web pages

- Manual copying and pasting for simple, small-scale text extraction needs

- Using PDF extraction tools for text extraction from PDF documents

- Automated text extraction software for high-volume and complex extraction tasks

Optical Character Recognition (OCR)

Optical Character Recognition (OCR) is a technology that converts images of handwritten, typed, or printed text into machine-readable text that a computer can search and process. OCR software analyzes the shapes and patterns of characters in an image or scanned document to identify and extract the text. This method is particularly useful for scanned books, handwritten notes, and photographs of text. Popular OCR tools include Adobe Acrobat, Readiris, and Tesseract OCR. When using OCR, it’s essential to consider the quality of the source image: sharp, high-resolution images with clear text yield better extraction results than blurry or skewed ones.
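As a rough illustration of the OCR workflow, the sketch below runs Tesseract on an image through the `pytesseract` wrapper and tidies the raw output. It assumes the Tesseract engine plus the `pytesseract` and `Pillow` packages are installed. The function names `extract_text_from_image` and `tidy_ocr_text` are hypothetical helpers chosen for this example, and converting to grayscale is just one simple preprocessing step, not a guaranteed accuracy fix.

```python
def extract_text_from_image(path, lang="eng"):
    """Run Tesseract OCR on an image file and return the recognized text.

    Assumes Tesseract, pytesseract, and Pillow are installed. The imports
    are deferred so the helper below works without them.
    """
    from PIL import Image
    import pytesseract

    with Image.open(path) as image:
        # Grayscale conversion is a simple, optional preprocessing step.
        grayscale = image.convert("L")
    return pytesseract.image_to_string(grayscale, lang=lang)


def tidy_ocr_text(raw):
    """Collapse runs of whitespace and drop empty lines from OCR output."""
    lines = (" ".join(line.split()) for line in raw.splitlines())
    return "\n".join(line for line in lines if line)
```

A typical call would be `tidy_ocr_text(extract_text_from_image("scan.png"))`, where `scan.png` is a placeholder file name.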

Web Scraping

Web scraping involves using software to navigate a website, locate specific data (including text), and extract it. This method is useful for gathering information from websites that do not provide an API (Application Programming Interface) for data access. Web scraping tools like Beautiful Soup, Scrapy, and Selenium can be used to extract text from web pages. However, it’s crucial to respect each website’s terms of use and its robots.txt file, which may restrict automated access. A scraper may also have to cope with anti-scraping measures, and it must comply with data protection regulations such as GDPR.
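The two ideas in this section, checking robots.txt and pulling text out of HTML, can be sketched with Python's standard library alone. This is a minimal sketch, not a replacement for Beautiful Soup or Scrapy: the class `TextExtractor` and the functions `html_to_text` and `allowed_by_robots` are hypothetical names for this example, and the HTML and robots.txt content are passed in as strings so that no network access is needed.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def html_to_text(html):
    """Return the visible text of an HTML string, one chunk per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


def allowed_by_robots(robots_txt, user_agent, url):
    """Check a URL against the rules in a robots.txt string."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)
```

In practice the page and the robots.txt file would be downloaded first, and a passing robots.txt check does not replace reading the site's terms of use.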

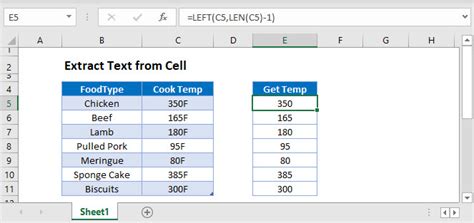

Manual Copying and Pasting

For small-scale text extraction needs, manual copying and pasting is a straightforward method. This involves selecting the text from a source, such as a document, email, or web page, and pasting it into a destination document or application. While this method is simple and effective for small amounts of text, it becomes impractical and time-consuming for large volumes of data. It’s also prone to errors, such as typos or missed sections, especially when dealing with lengthy texts.

PDF Extraction Tools

Portable Document Format (PDF) files are widely used for sharing and viewing documents while preserving their layout. Extracting text from PDFs can be challenging because the format prioritizes visual representation over text accessibility. However, tools like Smallpdf, PDFMiner, and Adobe Acrobat can extract text from PDFs. How well they work depends on the PDF’s quality and on whether its pages are scanned images (which require OCR) or contain an actual text layer.
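The distinction between text-based and scanned PDFs can be illustrated with a short sketch. It assumes the `pdfminer.six` package is installed and uses its `extract_text` function. The names `extract_pdf_text` and `looks_scanned`, and the 20-character threshold, are assumptions made for this example: an almost empty result is only a hint that the pages are images, not proof.

```python
def extract_pdf_text(path):
    """Extract the text layer of a PDF using pdfminer.six.

    The import is deferred so looks_scanned() works without the package.
    """
    from pdfminer.high_level import extract_text

    return extract_text(path)


def looks_scanned(text, min_chars=20):
    """Heuristic: very little extracted text suggests image-only pages.

    min_chars is an arbitrary threshold chosen for illustration.
    """
    return len(text.strip()) < min_chars
```

If `looks_scanned(extract_pdf_text("report.pdf"))` returns `True` (with `report.pdf` as a placeholder name), the file is a candidate for OCR rather than direct text extraction.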

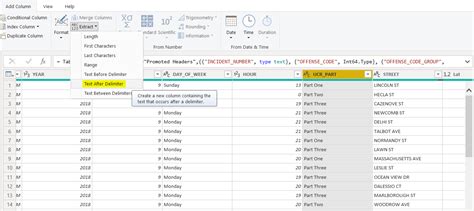

Automated Text Extraction Software

For high-volume and complex text extraction tasks, automated text extraction software is usually the most efficient option. These tools can handle various sources, including documents, emails, and web pages, and can extract specific data based on predefined rules or patterns. Software like Automate, Parseur, and Connotate offers advanced features such as data validation, filtering, and output customization. When selecting an automated text extraction tool, evaluate how well it fits your needs, including the types of sources, the volume of data, and the required accuracy and speed of extraction.
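Extraction "based on predefined rules or patterns" can be pictured as a table of named regular expressions applied to each incoming text, followed by a simple validation step. The sketch below is an illustration only and does not reflect how any of the products named above work internally. The field names, the patterns in `RULES`, and the sample invoice layout are all hypothetical.

```python
import re

# Hypothetical rules: each field name maps to a pattern with one capture group.
RULES = {
    "invoice_number": re.compile(
        r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE
    ),
    "date": re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"),
    "total": re.compile(r"Total\s*:?\s*\$?\s*([\d,]+\.\d{2})", re.IGNORECASE),
}


def extract_fields(text, rules=RULES):
    """Apply every rule to the text; fields that do not match become None."""
    result = {}
    for name, pattern in rules.items():
        match = pattern.search(text)
        result[name] = match.group(1) if match else None
    return result


def missing_fields(fields):
    """Basic validation: list the fields for which no rule matched."""
    return [name for name, value in fields.items() if value is None]
```

Running `extract_fields` over a batch of emails and routing any record with a non-empty `missing_fields` result to manual review is one simple way to combine automation with a quality check.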

| Extraction Method | Description | Best Use Case |
|---|---|---|
| OCR | Converts images of text into editable text | Scanned documents, handwritten notes |
| Web Scraping | Extracts data from websites | Gathering public data from websites without APIs |
| Manual Copying | Copying text from one source to another | Small amounts of text from easily accessible sources |
| PDF Extraction | Extracts text from PDF files | Documents and reports shared in PDF format |
| Automated Software | Uses predefined rules to extract text from various sources | High-volume extraction tasks requiring precision and speed |

What is the most accurate method for extracting text from images?

Optical Character Recognition (OCR) is the standard method for extracting text from images. Its accuracy depends on the quality of the OCR software and the clarity of the source image, so high-quality software and clear, high-resolution images give the best results.

Is web scraping legal?

The legality of web scraping depends on the terms of service of the website being scraped and the nature of the data being extracted. Always ensure compliance with website policies and relevant laws like GDPR.

How do I choose the best tool for extracting text from PDFs?

When choosing a PDF extraction tool, consider the quality of the PDF, whether it contains scanned images or editable text, and the features you need, such as layout preservation or text editing capabilities.