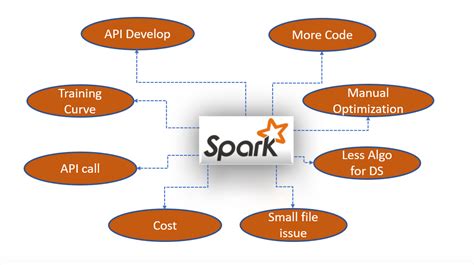

Apache Spark is a powerful, open-source data processing engine that has revolutionized the way we handle big data. However, like any other system, Spark has its limitations, particularly when it comes to startup memory. In this article, we look at why Spark's memory settings can constrain an application from the moment it starts, what those limits mean in practice, and how to work around them.

Key Points

- Spark's default memory settings (1g for the driver and for each executor) can be too small for large workloads, leading to slow jobs or out-of-memory errors

- Understanding the Spark configuration properties is crucial for optimizing memory usage

- Increasing the heap size, tuning how much of the heap is used for caching, and optimizing data structures can help alleviate memory limitations

- Monitoring Spark applications and adjusting configurations accordingly is essential for optimal performance

- Best practices, such as using efficient data structures and avoiding unnecessary data caching, can help minimize memory usage

Understanding Spark Memory Configuration

Spark’s memory management is a complex process, involving multiple configuration properties that control how memory is allocated and utilized. The primary configuration properties that affect Spark’s memory usage are spark.driver.memory, spark.executor.memory, and spark.memory.fraction. The first two set the heap size of the driver and of each executor. The third sets the fraction of each heap, after a fixed 300 MiB reserve, that Spark shares between execution (shuffles, joins, sorts, and aggregations) and storage (cached data). Understanding these properties and their interplay is essential for optimizing Spark’s memory usage and avoiding startup memory limitations.
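The interplay between these properties can be worked out with simple arithmetic. The Python below is a minimal sketch assuming the unified memory model described in Spark's tuning guide: 300 MiB is reserved, spark.memory.fraction of the remainder is shared by execution and storage, and spark.memory.storageFraction (default 0.5) of that shared region is storage protected from eviction. As far as we can tell from Spark's UnifiedMemoryManager source, a heap smaller than 1.5 times the reserve (450 MiB) is rejected at startup, so the sketch models that check too. parse_memory and memory_regions are hypothetical helpers, not Spark APIs, and the real JVM usually reports slightly less heap than the configured value.

```python
# Minimal sketch of how Spark's unified memory manager divides one JVM heap.
# Assumption: the heap equals the configured size; the JVM usually reports a
# little less through Runtime.maxMemory(), so real numbers are slightly lower.

RESERVED_BYTES = 300 * 1024 * 1024          # set aside before any split
MIN_HEAP_BYTES = int(RESERVED_BYTES * 1.5)  # 450 MiB: smaller heaps fail at startup

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}


def parse_memory(value: str) -> int:
    """Convert a JVM-style size such as '512m' or '4g' to bytes (hypothetical helper)."""
    value = value.strip().lower()
    if value[-1] in _UNITS:
        return int(value[:-1]) * _UNITS[value[-1]]
    return int(value) * _UNITS["m"]  # a bare number is read as MiB


def memory_regions(heap: str, fraction: float = 0.6, storage_fraction: float = 0.5) -> dict:
    """Split a heap per spark.memory.fraction and spark.memory.storageFraction."""
    heap_bytes = parse_memory(heap)
    if heap_bytes < MIN_HEAP_BYTES:
        raise ValueError(f"heap {heap} is below the {MIN_HEAP_BYTES // 1024 ** 2} MiB minimum")
    usable = heap_bytes - RESERVED_BYTES
    unified = int(usable * fraction)  # shared by execution and storage
    return {
        "reserved": RESERVED_BYTES,
        "user": usable - unified,  # user data structures and Spark metadata
        "unified": unified,
        "protected_storage": int(unified * storage_fraction),  # cache execution cannot evict
    }


# Example: the regions of a 4g executor with default fractions.
for name, size in memory_regions("4g").items():
    print(f"{name:>17}: {size / 1024 ** 2:7.1f} MiB")
```

With the defaults, only about 60% of what is left after the reserve is available to execution and caching combined, which is why a heap that looks generous on paper can still run short.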

Spark Driver Memory

The spark.driver.memory property sets the heap size of the Spark driver. The default value is 1g, which can be insufficient for large-scale applications. Increasing the driver memory can help alleviate performance issues and errors caused by insufficient memory. However, a larger value is not free: it must fit within the memory available on the machine or container that hosts the driver, and in client mode it must be set before the driver JVM starts (with the --driver-memory option or in the properties file), because setting it in application code has no effect once the driver is already running.
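Because the driver JVM is already running by the time application code builds its configuration in client mode, Spark's documentation says spark.driver.memory must be supplied at launch. The sketch below assembles such a launch command in Python; spark_submit_args and my_job.py are hypothetical names, while --driver-memory, --executor-memory, and --conf are real spark-submit options.

```python
import shlex


def spark_submit_args(app, driver_memory="1g", executor_memory="1g", conf=None):
    """Build a spark-submit argument list that sizes the JVMs before they start (hypothetical helper)."""
    args = ["spark-submit",
            "--driver-memory", driver_memory,      # driver heap, fixed at JVM launch
            "--executor-memory", executor_memory]  # heap of each executor
    for key, value in sorted((conf or {}).items()):
        args += ["--conf", f"{key}={value}"]       # any other Spark property
    args.append(app)
    return args


cmd = spark_submit_args("my_job.py", driver_memory="4g", executor_memory="8g",
                        conf={"spark.memory.fraction": "0.6"})
print(shlex.join(cmd))
# spark-submit --driver-memory 4g --executor-memory 8g --conf spark.memory.fraction=0.6 my_job.py
```

Keeping the command as a list lets it be passed directly to subprocess.run without going through a shell.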

| Configuration Property | Default Value | Description |
|---|---|---|
| spark.driver.memory | 1g | Heap size of the Spark driver process |
| spark.executor.memory | 1g | Heap size of each executor process |
| spark.memory.fraction | 0.6 | Fraction of (heap - 300 MiB) shared by execution and storage (cached data) |

Optimizing Spark Memory Usage

Optimizing Spark’s memory usage requires a combination of configuration adjustments, data structure optimizations, and best practices: sizing the driver and executor heaps, tuning how much of each heap goes to caching, and choosing compact data representations. Monitoring applications and adjusting the configuration based on what you observe then confirms whether those changes helped.

Best Practices for Memory Optimization

Following best practices, such as using efficient data structures, avoiding unnecessary data caching, and optimizing data processing workflows, can help minimize memory usage. For example, Apache Arrow, an in-memory columnar format, lets PySpark move data to and from pandas without converting it row by row. Similarly, Apache Parquet stores data on disk in a compressed, columnar format, so Spark can read only the columns a query needs instead of loading entire rows.

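Another best practice is to work with Spark's unified memory manager, which is tuned through spark.memory.fraction and spark.memory.storageFraction. Within the region set by spark.memory.fraction, execution and storage share memory: either side can borrow space the other is not using, and execution can evict cached blocks when it needs room. spark.memory.storageFraction (default 0.5) sets the share of that region in which cached blocks are protected from eviction. This sharing lets Spark adapt to the workload at runtime, which reduces, but does not eliminate, the risk of out-of-memory errors.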
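To make the borrowing rule concrete, here is a deliberately simplified Python model based on our reading of Spark's tuning guide: storage can use free memory but never evicts execution, while execution can take free memory and then evict cached blocks, but only down to the spark.memory.storageFraction share. UnifiedPool and its methods are hypothetical; the byte counts are illustrative, and the model ignores Spark's per-task limits and spilling to disk.

```python
from dataclasses import dataclass


@dataclass
class UnifiedPool:
    """Toy model of the shared execution/storage region (hypothetical, heavily simplified)."""
    size: int                # bytes set aside by spark.memory.fraction
    storage_fraction: float  # spark.memory.storageFraction
    execution_used: int = 0
    storage_used: int = 0

    @property
    def free(self) -> int:
        return self.size - self.execution_used - self.storage_used

    def acquire_storage(self, n: int) -> bool:
        # Storage may use any free memory but never evicts execution.
        if n > self.free:
            return False
        self.storage_used += n
        return True

    def acquire_execution(self, n: int) -> int:
        # Execution takes free memory first, then evicts cached blocks,
        # but only those above the protected storage share.
        free = self.free
        protected = int(self.size * self.storage_fraction)
        evictable = max(0, self.storage_used - protected)
        granted = min(n, free + evictable)
        if granted > free:
            self.storage_used -= granted - free  # evict cached blocks
        self.execution_used += granted
        return granted


pool = UnifiedPool(size=1000, storage_fraction=0.5)
pool.acquire_storage(800)    # the cache grows into unused space
pool.acquire_execution(600)  # gets 200 free + 300 evicted, stops at the protected 500
print(pool.execution_used, pool.storage_used)  # 500 500
```

In the example, execution asks for 600 but receives 500, because the remaining cached blocks sit inside the protected share; in real Spark a task in that position would typically spill to disk.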

Monitoring Spark Applications

Monitoring Spark applications is essential for optimal performance and memory usage. Spark provides several tools for monitoring and debugging applications, including the Spark web UI, the monitoring REST API, and event logs that the history server can display after an application finishes. These tools report memory usage per executor, task execution times, and other performance metrics, allowing developers to identify and optimize performance bottlenecks.
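The REST API makes such checks scriptable. The sketch below assumes the executors endpoint of Spark's monitoring REST API (/api/v1/applications/[app-id]/executors, served by the web UI, by default on port 4040) and its memoryUsed and maxMemory fields, which report storage memory in bytes. executors_url, fetch_executors, storage_pressure, and the 0.8 threshold are hypothetical choices, and the sample records are illustrative, not real measurements.

```python
import json
from urllib.request import urlopen


def executors_url(ui: str, app_id: str) -> str:
    # Monitoring REST API endpoint that lists executors for one application.
    return f"{ui.rstrip('/')}/api/v1/applications/{app_id}/executors"


def fetch_executors(ui: str, app_id: str) -> list:
    # Requires a running application (or a history server) at `ui`.
    with urlopen(executors_url(ui, app_id)) as resp:
        return json.load(resp)


def storage_pressure(executors: list, threshold: float = 0.8) -> list:
    """Return (id, ratio) for executors whose memoryUsed / maxMemory exceeds threshold."""
    flagged = []
    for ex in executors:
        if ex.get("maxMemory", 0) > 0:
            ratio = ex["memoryUsed"] / ex["maxMemory"]
            if ratio > threshold:
                flagged.append((ex["id"], round(ratio, 2)))
    return flagged


# Illustrative records shaped like the endpoint's output.
sample = [{"id": "driver", "memoryUsed": 100, "maxMemory": 1000},
          {"id": "1", "memoryUsed": 900, "maxMemory": 1000}]
print(storage_pressure(sample))  # [('1', 0.9)]
# Against a live application: storage_pressure(fetch_executors("http://localhost:4040", app_id))
```

Executors that stay near their storage limit are candidates for a larger spark.executor.memory or for caching less data.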

What is the default value of spark.driver.memory?

The default value of spark.driver.memory is 1g.

How can I increase the heap size in Spark?

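You can increase the driver's heap by setting the spark.driver.memory property to a higher value, such as 2g or 4g, and each executor's heap with spark.executor.memory. In client mode, set the driver value at launch (for example, spark-submit --driver-memory 4g) rather than in application code.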

What is the purpose of spark.memory.fraction?

The spark.memory.fraction property controls the fraction of the Java heap, after 300 MiB is reserved, that Spark shares between execution and storage (cached data). The default value is 0.6.

In conclusion, Spark startup memory limitations can be a significant challenge for developers and data engineers. Understanding Spark’s memory configuration properties, optimizing memory usage, and following best practices go a long way toward alleviating them. Monitoring applications and adjusting configurations based on what the metrics show helps developers find bottlenecks and keep their Spark applications running efficiently.