As a professional working with big data, you're likely familiar with Databricks, a powerful platform for data engineering, data science, and data analytics. Databricks jobs are a crucial component of this ecosystem, allowing users to run Spark code in a scalable and managed environment. However, maximizing the efficiency and effectiveness of these jobs requires a deep understanding of best practices and optimization techniques. In this article, we'll explore five expert tips for working with Databricks jobs, helping you to streamline your workflow, improve performance, and get the most out of your data processing tasks.

Key Points

- Optimizing cluster configuration for cost and performance

- Implementing efficient data ingestion and processing strategies

- Leveraging Databricks' built-in features for job optimization

- Monitoring and troubleshooting job performance with advanced tools

- Utilizing version control and collaboration features for job management

Understanding Databricks Jobs

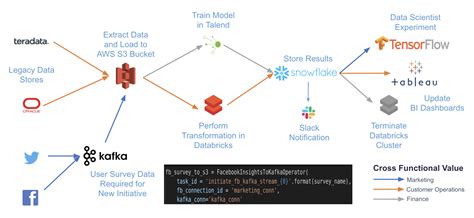

Databricks jobs are the backbone of any data processing pipeline, enabling users to execute Spark code on a managed cluster. These jobs can be used for a wide range of tasks, from data ingestion and transformation to machine learning model training and deployment. To get the most out of Databricks jobs, it’s essential to understand the underlying architecture and how to optimize cluster configuration, data processing, and job execution.

Tip 1: Optimizing Cluster Configuration

One of the most critical factors in determining the performance and cost of Databricks jobs is the cluster configuration. The type and number of nodes, instance types, and autoscaling settings all play a significant role in how efficiently your jobs run. To optimize your cluster configuration, consider the following strategies:

- Right-size your cluster: Ensure that your cluster has the appropriate number of nodes and instance types to handle your workload. Too few nodes can lead to slow job execution, while too many can result in unnecessary costs.

- Use autoscaling: Enable autoscaling to automatically adjust the number of nodes in your cluster based on the workload. This can help reduce costs and improve performance.

- Choose the right instance type: Select instance types that are optimized for your specific workload. For example, if you’re running CPU-intensive jobs, choose instance types with high CPU performance.

| Instance Type | CPU Performance | Memory | Cost |

|---|---|---|---|

| i3.2xlarge | 8 vCPUs | 61 GiB | $0.528 per hour |

| i3.4xlarge | 16 vCPUs | 122 GiB | $1.056 per hour |

| i3.8xlarge | 32 vCPUs | 244 GiB | $2.112 per hour |

Efficient Data Ingestion and Processing

Efficient data ingestion and processing are critical components of any Databricks job. By leveraging optimized data processing strategies and leveraging Databricks’ built-in features, you can significantly improve the performance and efficiency of your jobs. Some key strategies for efficient data ingestion and processing include:

- Using Delta Lake: Delta Lake is a highly performant and scalable data storage format that provides ACID transactions, data versioning, and other advanced features. By using Delta Lake, you can improve the performance and reliability of your data ingestion and processing pipelines.

- Leveraging Databricks’ built-in features: Databricks provides a range of built-in features for optimizing data ingestion and processing, including automatic data optimization, data caching, and advanced data processing algorithms. By leveraging these features, you can simplify your data processing workflows and improve performance.

- Implementing data partitioning: Data partitioning is a critical technique for improving the performance of data processing jobs. By partitioning your data into smaller, more manageable chunks, you can reduce the overhead of data processing and improve overall performance.

Tip 2: Implementing Efficient Data Ingestion Strategies

Efficient data ingestion is critical for any Databricks job, as it can significantly impact the overall performance and efficiency of your data processing pipeline. Some key strategies for efficient data ingestion include:

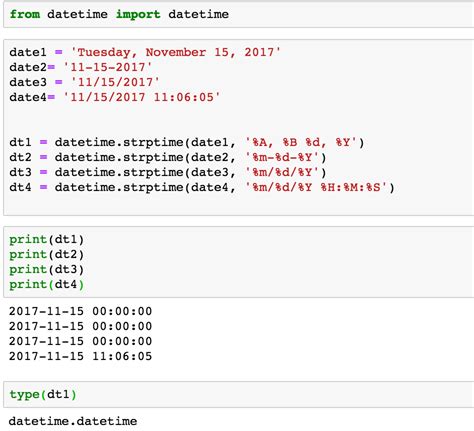

- Using Apache Spark’s built-in data sources: Apache Spark provides a range of built-in data sources that can be used to ingest data from various sources, including files, databases, and messaging systems. By using these data sources, you can simplify your data ingestion workflows and improve performance.

- Implementing data validation and quality checks: Data validation and quality checks are essential for ensuring the accuracy and reliability of your data. By implementing these checks, you can detect and correct data errors early in the data processing pipeline, reducing the risk of downstream errors and improving overall data quality.

- Using data ingestion frameworks: Data ingestion frameworks, such as Apache NiFi and Apache Beam, provide a range of tools and features for simplifying and optimizing data ingestion workflows. By using these frameworks, you can improve the efficiency and reliability of your data ingestion pipelines.

Monitoring and Troubleshooting Job Performance

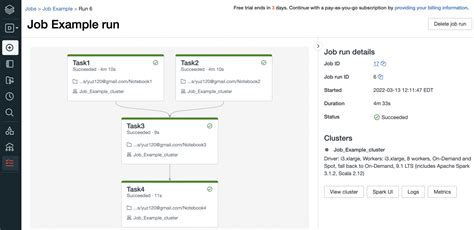

Monitoring and troubleshooting job performance are critical components of any Databricks workflow. By leveraging advanced monitoring and troubleshooting tools, you can quickly identify and resolve issues, improving the overall efficiency and reliability of your jobs. Some key strategies for monitoring and troubleshooting job performance include:

- Using Databricks’ built-in monitoring tools: Databricks provides a range of built-in monitoring tools, including job metrics, cluster metrics, and Spark UI. By using these tools, you can gain real-time insights into job performance and quickly identify issues.

- Leveraging external monitoring tools: External monitoring tools, such as Prometheus and Grafana, provide a range of advanced features for monitoring and troubleshooting job performance. By leveraging these tools, you can gain deeper insights into job performance and improve overall efficiency and reliability.

- Implementing alerting and notification systems: Alerting and notification systems are essential for quickly identifying and resolving issues. By implementing these systems, you can ensure that issues are detected and resolved quickly, minimizing downtime and improving overall efficiency.

Tip 3: Leveraging Databricks’ Built-in Features for Job Optimization

Databricks provides a range of built-in features for optimizing job performance, including automatic data optimization, data caching, and advanced data processing algorithms. By leveraging these features, you can simplify your data processing workflows and improve performance. Some key strategies for leveraging Databricks’ built-in features include:

- Using automatic data optimization: Automatic data optimization is a critical feature for improving job performance. By using this feature, you can ensure that your data is optimized for processing, reducing the overhead of data processing and improving overall performance.

- Implementing data caching: Data caching is a critical technique for improving job performance. By caching frequently accessed data, you can reduce the overhead of data processing and improve overall performance.

- Leveraging advanced data processing algorithms: Advanced data processing algorithms, such as machine learning and deep learning, provide a range of features for improving job performance. By leveraging these algorithms, you can simplify your data processing workflows and improve overall efficiency and reliability.

Utilizing Version Control and Collaboration Features

Version control and collaboration are critical components of any Databricks workflow. By leveraging version control systems, such as Git, and collaboration tools, such as Databricks’ built-in collaboration features, you can improve the efficiency and reliability of your jobs. Some key strategies for utilizing version control and collaboration features include:

- Using version control systems: Version control systems, such as Git, provide a range of features for managing and tracking changes to your code. By using these systems, you can ensure that your code is versioned and tracked, improving overall efficiency and reliability.

- Leveraging collaboration tools: Collaboration tools, such as Databricks’ built-in collaboration features, provide a range of features for simplifying and optimizing collaboration workflows. By leveraging these tools, you can improve the efficiency and reliability of your jobs and ensure that team members are working together effectively.

- Implementing continuous integration and continuous deployment (CI/CD) pipelines: CI/CD pipelines are essential for ensuring that your code is tested, validated, and deployed quickly and reliably. By implementing these pipelines, you can improve the efficiency and reliability of your jobs and ensure that team members are working together effectively.

Tip 4: Monitoring and Troubleshooting Job Performance with Advanced Tools

Monitoring and troubleshooting job performance are critical components of any Databricks workflow. By leveraging advanced monitoring and troubleshooting tools, you can quickly identify and resolve issues, improving the overall efficiency and reliability of your jobs. Some key strategies for monitoring and troubleshooting job performance include:

- Using Databricks’ built-in monitoring tools: Databricks provides a range of built-in monitoring tools, including job metrics, cluster metrics, and Spark UI. By using these tools, you can gain real-time insights into job performance and quickly identify issues.

- Leveraging external monitoring tools: External monitoring tools, such as Prometheus and Grafana, provide a range of advanced features for monitoring and troubleshooting job performance. By leveraging these tools, you can gain deeper insights into job performance and improve overall efficiency and reliability.

- Implementing alerting and notification systems: Alerting and notification systems are essential for quickly identifying and resolving issues. By implementing these systems, you can ensure that issues are detected and resolved quickly, minimizing downtime and improving overall efficiency.

Tip 5: Utilizing Version Control and Collaboration Features for Job Management

Version control and collaboration are critical components of any Databricks workflow. By leveraging version control systems, such as Git, and collaboration tools, such as Databricks’ built-in collaboration features, you can improve the efficiency and reliability of your jobs. Some key strategies for utilizing version control and collaboration features include:

- Using version control systems: Version control systems, such as Git, provide a range of features for managing and tracking changes to your code. By using these systems, you can ensure that your code is versioned and tracked, improving overall efficiency and reliability.

- Leveraging collaboration tools: Collaboration tools, such as Databricks’ built-in collaboration features, provide a range of features for simplifying and optimizing collaboration workflows. By leveraging these tools, you can improve the efficiency and reliability of your jobs and ensure that team members are working together effectively.

- Implementing continuous integration and continuous deployment (CI/CD) pipelines: CI/CD pipelines are essential for ensuring that your code is tested, validated, and deployed quickly and reliably. By implementing these pipelines, you can improve the efficiency and reliability of your jobs and ensure that team members are working together effectively.

What is the best way to optimize cluster configuration for Databricks jobs?

+The best way to optimize cluster configuration for Databricks jobs is to right-size your cluster, use autoscaling, and choose the right instance type. Additionally, consider using Databricks’ built-in features, such as automatic data optimization and data caching, to simplify your data processing workflows and improve performance.

How can I improve the efficiency of my data ingestion pipelines in Databricks?

+You can improve the efficiency of your data ingestion pipelines in Databricks by using Apache Spark’s built-in data sources, implementing data validation and quality checks, and leveraging data ingestion frameworks. Additionally, consider using Databricks’ built-in features, such as automatic data optimization and data caching, to simplify your data processing workflows and improve performance.

What are some best practices for monitoring and troubleshooting job performance in Databricks?

+Some best practices for monitoring and troubleshooting job performance in Databricks include using Databricks’ built-in monitoring tools, leveraging external monitoring tools, and implementing alerting and notification systems. Additionally, consider using version control systems and collaboration tools to improve the efficiency and reliability of your jobs and ensure that team members are working together effectively.